
A Guide to Audio to Text AI Technology

October 5, 2025

Think of a digital stenographer that listens to any conversation, meeting, or voice note and instantly types out every single word. That's the essence of audio to text AI — a technology that turns spoken language into written, searchable text. It's the magic behind your favorite voice assistants and the engine that automatically generates your meeting summaries.

From Sound Waves to Searchable Text

This process of converting sound into digital words isn't just a neat trick anymore. It's become a critical tool for professionals in all sorts of fields, saving countless hours and unlocking the valuable information that used to be stuck inside audio recordings.

At the heart of it all is a process called Automatic Speech Recognition (ASR). You can think of ASR as the AI's ears. It listens to sound patterns, breaks them down into the smallest phonetic units, and then stitches them back together into candidate words and sentences.

The Growing Demand for Voice Data

The real-world uses for this technology are popping up everywhere, and the market growth reflects that. The global speech-to-text API market was valued at roughly USD 4.42 billion in 2025 and is expected to surge to USD 8.57 billion by 2030. That’s nearly double in just five years, showing just how quickly businesses in media, education, and healthcare are adopting it.

You can see the impact most clearly in highly specialized fields. For instance, doctors and nurses are now using AI to capture patient notes, which frees them up from hours of clunky administrative work. If you're curious about how this works in a real-world medical setting, Simbie AI has a great resource on AI medical transcription.

At its core, audio to text AI is a productivity multiplier. It takes the most natural form of human communication—speech—and makes it as easy to edit, search, and analyze as any written document.

In this guide, we’ll break down exactly how this technology works, what makes it accurate (or inaccurate), and how you can start using it to turn spoken ideas into powerful, actionable text.

How AI Learns to Understand Speech

Think about how a person learns a new language. At first, it's all just a jumble of sounds. Over time, you start to pick out the basic building blocks—the individual sounds, or phonemes—like the "k" sound in "cat" or the "sh" sound in "shoe."

An AI starts its journey in a very similar way. It doesn't actually hear "words." It receives a digital stream of sound waves, and its first job is to break that complex audio down into phonetic components it can recognize. This is the first step in Automatic Speech Recognition (ASR), which is basically the AI's digital ear.

The Two Pillars of Understanding

Now, ASR is great at turning raw sound into a sequence of words, but that's only half the battle. A list of words isn't the same as understanding. For instance, the phrase "I scream for ice cream" sounds almost identical to "ice cream for I scream," but one is a common saying and the other is just nonsense.

This is where the second pillar, Natural Language Processing (NLP), steps in. If ASR is the ears, NLP is the brain. NLP models are trained on massive datasets of text—think books, articles, and countless websites. This is how they learn the rules of grammar, context, and the subtle relationships between words. It’s how the AI knows "ice cream" is a common pairing and understands what a typical sentence should look like.

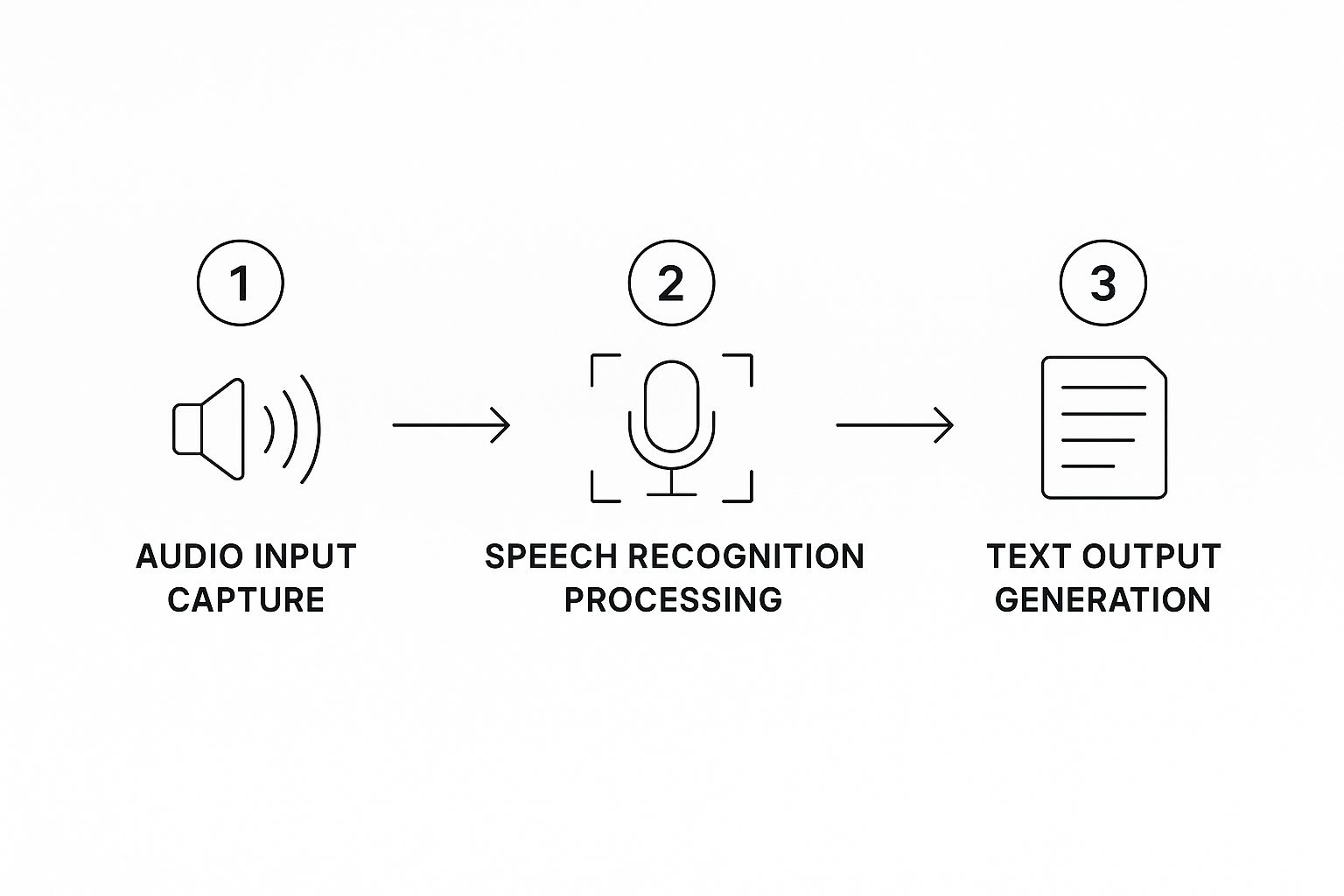

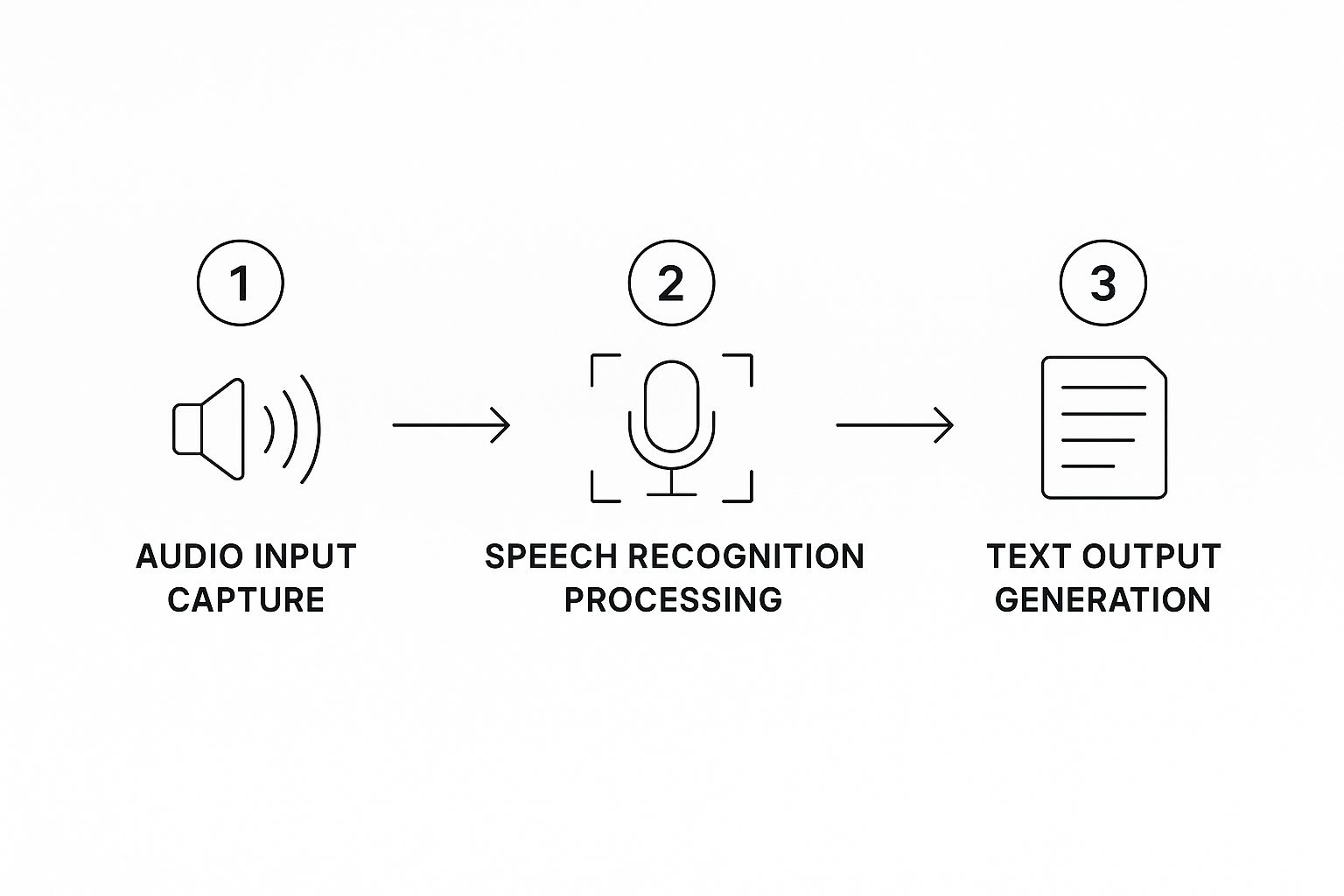

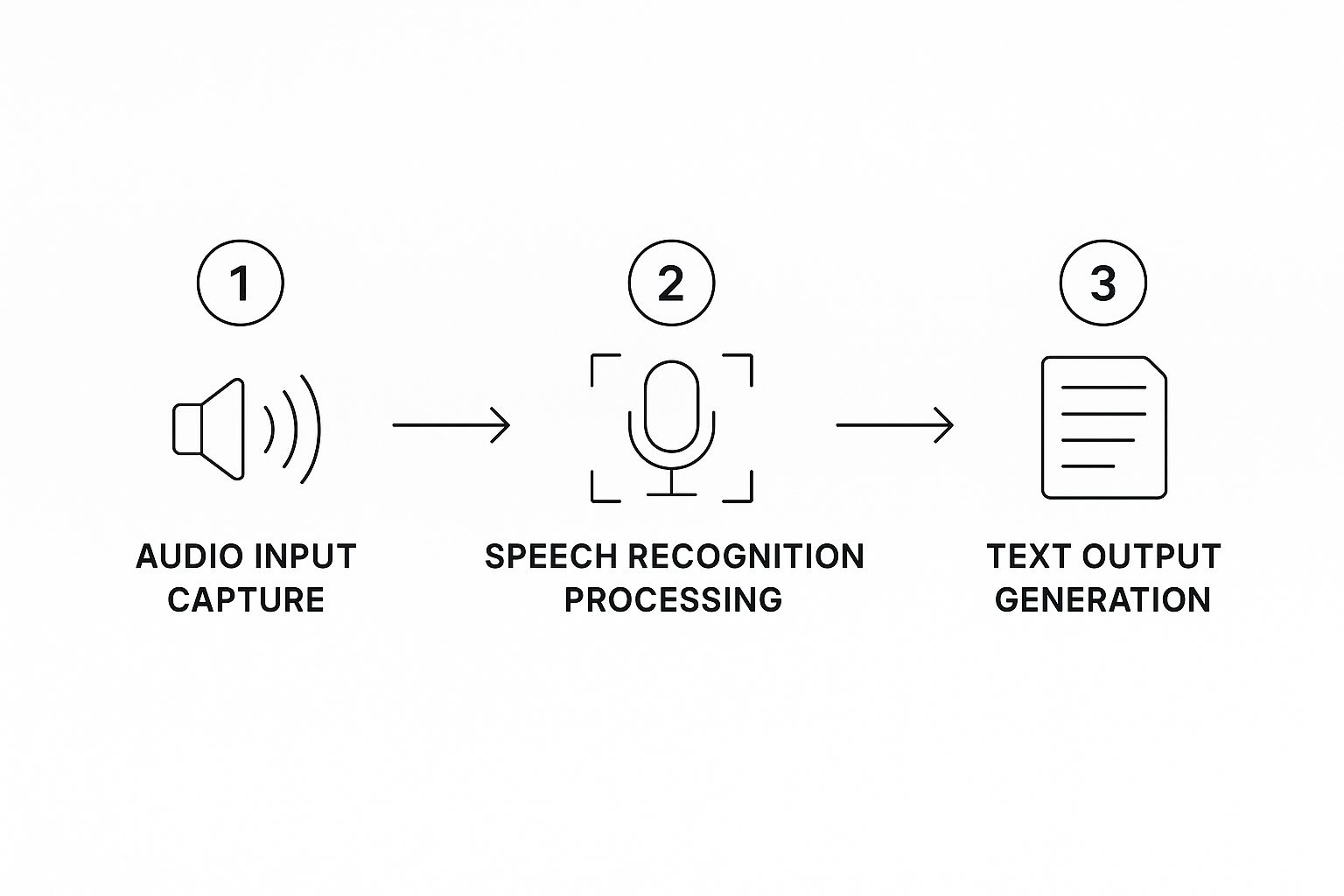

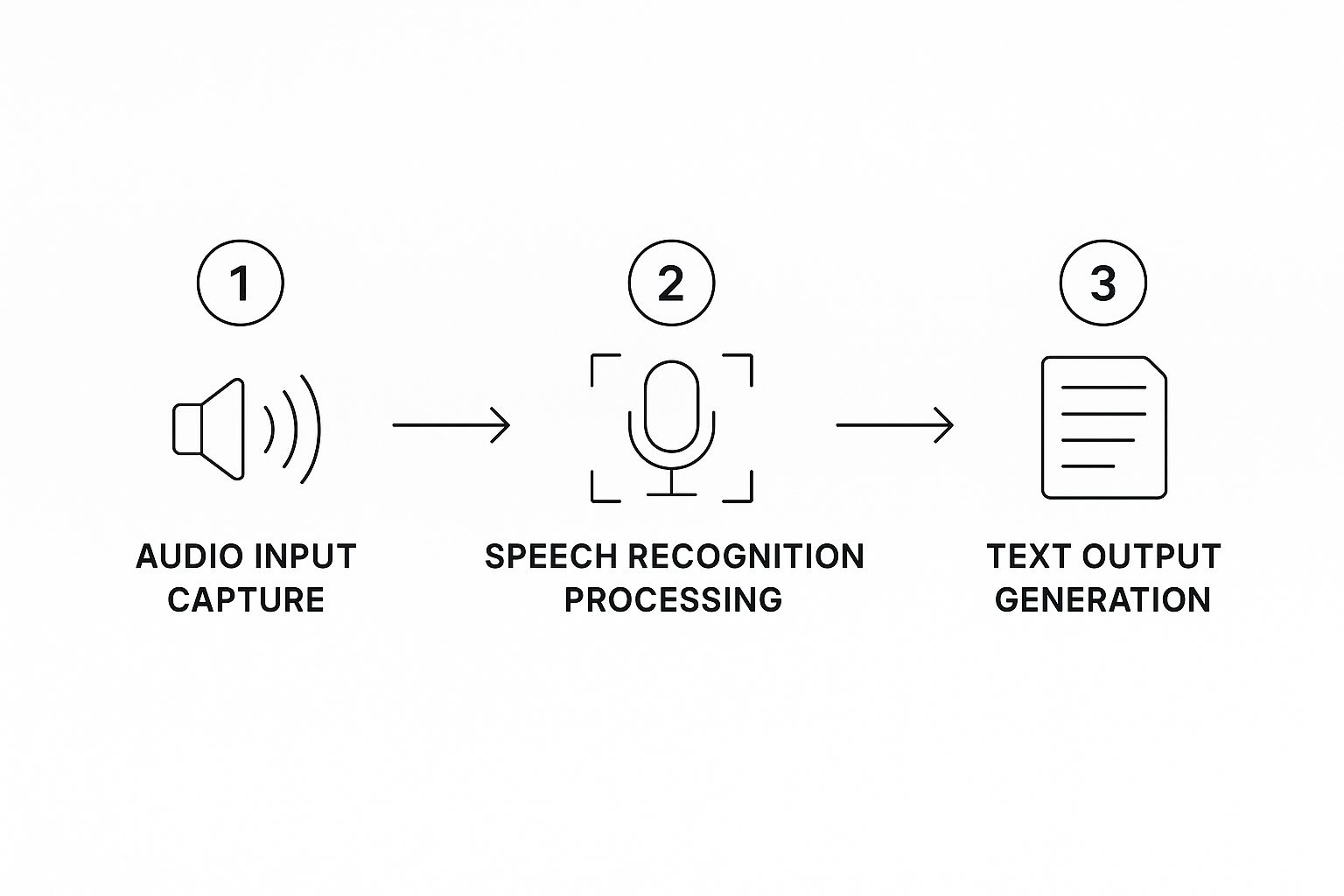

The accompanying infographic gives you a bird's-eye view of how these two systems work in tandem to turn spoken words into clean, coherent text.

This chart helps visualize the journey from a simple soundwave all the way to structured text, highlighting the different stages of capture, processing, and final output.

From Sounds to Sentences

So what does this look like in practice? When you speak into a device, the ASR model gets to work immediately.

Sound Segmentation: First, the AI chops the audio waveform into tiny slices, typically a few tens of milliseconds long. Each one has distinct sound properties.

Phoneme Matching: The model then analyzes these slices and matches them to the most likely phonemes it knows from its vast training data.

Word Assembly: Finally, it starts stringing these phonemes together to form potential words. Here's where it gets tricky. Is the speaker saying "recognize speech" or "wreck a nice beach"? Phonetically, they're nearly identical.
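To make the segmentation step a bit more concrete, here is a minimal Python sketch of slicing audio into short, overlapping frames. The 25 ms window and 10 ms step are assumptions (they are common choices in speech processing, not something every system uses), the 440 Hz tone simply stands in for real speech, and `frame_signal` and `frame_energy` are hypothetical helper names.

```python
import math

def frame_signal(samples, sample_rate, frame_ms=25.0, hop_ms=10.0):
    """Split a list of samples into overlapping, fixed-length frames."""
    frame_len = int(sample_rate * frame_ms / 1000)  # samples per frame
    hop_len = int(sample_rate * hop_ms / 1000)      # samples between frame starts
    frames = []
    for start in range(0, len(samples) - frame_len + 1, hop_len):
        frames.append(samples[start:start + frame_len])
    return frames

def frame_energy(frame):
    """Mean squared amplitude: a crude stand-in for real acoustic features."""
    return sum(s * s for s in frame) / len(frame)

# One second of a synthetic 440 Hz tone at 16 kHz stands in for recorded speech.
sample_rate = 16000
samples = [math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(sample_rate)]

frames = frame_signal(samples, sample_rate)
print(len(frames), "frames of", len(frames[0]), "samples each")
print("energy of first frame:", round(frame_energy(frames[0]), 3))
```

A real recognizer would compute much richer features for each frame before trying to match phonemes, but the slicing itself looks roughly like this.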

This is where the AI's real intelligence kicks in. The NLP component steps up to evaluate the different word combinations based on pure context.

The AI doesn't just hear sounds; it calculates probabilities. After analyzing billions of sentences, it knows that "recognize speech" is a far more common and logical phrase, making it the most probable transcription.
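Here is a toy illustration of that probability calculation, written as a tiny bigram language model in Python. Every count below is invented for the example, the vocabulary size is an assumption used only for smoothing, and real systems rely on far larger neural models and combine this score with an acoustic score. Treat it as a sketch of the idea, not how any particular product works.

```python
import math

# Hypothetical bigram counts standing in for statistics learned from a huge corpus.
BIGRAM_COUNTS = {
    ("<s>", "recognize"): 50,
    ("recognize", "speech"): 40,
    ("<s>", "wreck"): 5,
    ("wreck", "a"): 4,
    ("a", "nice"): 30,
    ("nice", "beach"): 3,
}
# Hypothetical counts of how often each word appears as the first word of a pair.
CONTEXT_COUNTS = {"<s>": 1000, "recognize": 60, "wreck": 6, "a": 900, "nice": 80}
VOCAB_SIZE = 10_000  # assumed vocabulary size, used for add-one smoothing

def log_prob(sentence):
    """Sum of smoothed log bigram probabilities for a candidate transcription."""
    words = ["<s>"] + sentence.lower().split()
    total = 0.0
    for prev, word in zip(words, words[1:]):
        count = BIGRAM_COUNTS.get((prev, word), 0)
        context = CONTEXT_COUNTS.get(prev, 0)
        total += math.log((count + 1) / (context + VOCAB_SIZE))
    return total

candidates = ["recognize speech", "wreck a nice beach"]
for candidate in candidates:
    print(f"{candidate!r}: {log_prob(candidate):.2f}")

best = max(candidates, key=log_prob)
print("most probable:", best)
```

With these made-up counts, "recognize speech" gets the higher (less negative) score, which is the behavior the paragraph above describes.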

This predictive power is what enables a modern audio to text AI to navigate homophones, slang, and complex sentences with such surprising accuracy. At every step, the system is making an educated guess, constantly refining its output based on what it knows about real human communication. It's this powerful combination—ASR for listening and NLP for understanding—that turns a chaotic stream of sound into structured, meaningful text.

Why AI Transcription Accuracy Varies

You've probably noticed that the accuracy of an audio to text AI can feel like a moving target. One day it’s flawless, the next it’s full of mistakes. The reason is simple: not all audio is created equal. While the best AI models boast over 95% accuracy, that number assumes you're feeding them pristine, studio-quality sound. In the real world, the AI’s performance is a direct reflection of the audio quality it has to work with.

The core of the issue is a classic signal-to-noise problem. The words you want transcribed are the “signal.” Everything else—the humming air conditioner, the person coughing in the background, multiple people talking at once—is “noise.” The more noise you have, the harder it is for the AI to pick out the signal.
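Signal-to-noise ratio is usually expressed in decibels, and the arithmetic is simple enough to sketch. The sample values below are made up, and the example assumes you have the speech and the background noise as separate lists of samples, which is rarely true of a real recording. The helper names `rms` and `snr_db` are hypothetical.

```python
import math

def rms(samples):
    """Root-mean-square amplitude of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def snr_db(signal, noise):
    """Signal-to-noise ratio in decibels, computed from RMS amplitudes."""
    return 20 * math.log10(rms(signal) / rms(noise))

# Made-up samples: speech peaking at 0.5, an air-conditioner hum peaking at 0.05.
speech = [0.5, -0.5] * 100
hum = [0.05, -0.05] * 100

print(round(snr_db(speech, hum), 1), "dB")  # prints 20.0 dB
```

Here the speech is ten times louder than the hum, which works out to 20 dB. The closer that number gets to zero, the harder the transcription job becomes.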

Even things you might not think about can throw the AI for a loop. A speaker standing too far from the mic, using a cheap headset, or talking in a room with a bad echo can all distort the audio just enough to confuse the system’s pattern-matching algorithms.

Key Factors That Influence Accuracy

A handful of specific variables can make or break your transcription quality. Getting a handle on these is the first step to getting cleaner, more reliable results.

Audio Clarity: This is the big one. Clear audio from a good microphone placed close to the speaker will always outperform a recording made on a phone sitting in the middle of a noisy conference room.

Background Noise: Competing sounds are the AI’s kryptonite. A bustling coffee shop, passing sirens, or even quiet music can muddy the phonetic details the AI relies on, causing it to mishear or skip words entirely.

Speaker Overlap: When people talk over each other, their sound waves get mashed together into a confusing mess. Even the smartest AI systems struggle to untangle that jumble into separate, coherent sentences.

Accents and Pacing: Modern AI is trained on a huge variety of speech patterns, but heavy accents, regional slang, or speaking exceptionally fast can still be a challenge. If the speech deviates too much from the patterns the AI knows, mistakes are more likely.

The table below breaks down these common factors, explaining their direct impact and what you can do to manage them.

Factors Impacting Audio to Text AI Accuracy

| Factor | Impact on Accuracy | How to Optimize |
|---|---|---|
| Audio Quality | Low-quality recordings with distortion or low bitrates are difficult for an AI to interpret. | Use a high-quality microphone and record in a format like WAV or FLAC for best results. |
| Background Noise | Competing sounds from traffic, music, or other conversations can obscure the primary speaker's words. | Record in a quiet, controlled environment. Use noise-canceling microphones if possible. |
| Speaker Clarity | Mumbling, fast speech, or inconsistent volume levels can lead to transcription errors. | Encourage speakers to enunciate clearly and speak at a moderate, consistent pace. |
| Accents & Dialects | Strong, unfamiliar accents may not be well-represented in the AI's training data, causing misinterpretations. | Choose an AI model that specifies training on diverse accents or allows for accent adaptation. |
| Specialized Jargon | Technical, medical, or legal terms that aren't in the AI’s general vocabulary will likely be transcribed incorrectly. | Use a transcription service with custom vocabulary features or one specifically trained for your industry. |
| Speaker Overlap | When multiple people speak at once, the AI struggles to separate the different voices and words. | Encourage speakers to take turns. Use multi-channel recording to isolate each speaker's audio track. |

As you can see, a little bit of prep work on the front end can make a huge difference in the final transcript.

Dealing With Specialized Terminology

Another major hurdle for a general-purpose AI is industry-specific jargon. An AI trained on everyday conversations isn't going to recognize complex legal terms, scientific names, or company-specific acronyms. Instead, it will just guess the closest phonetic match it knows.

The AI transcribes what it thinks it hears based on probability. If it has never encountered the term "brachiocephalic artery," it might transcribe it as "break you cephalic artery" because those are more common words in its training data.
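One way to picture a fix is a post-processing pass that compares runs of transcribed words against a list of known terms. The sketch below uses Python's standard `difflib` module for the comparison. It is only an illustration: commercial custom vocabulary features typically influence the recognizer itself rather than patching its output, and the function name, the 0.7 threshold, and the four-word window are all assumptions chosen for this example.

```python
from difflib import SequenceMatcher

def apply_custom_vocabulary(transcript, terms, max_words=4, threshold=0.7):
    """Replace the word span that most resembles each custom term, if it is close enough."""
    words = transcript.split()
    for term in terms:
        target = term.replace(" ", "").lower()
        best_score, best_span = 0.0, None
        # Try every run of 1..max_words consecutive words as a candidate mishearing.
        for start in range(len(words)):
            for size in range(1, max_words + 1):
                end = start + size
                if end > len(words):
                    break
                candidate = "".join(words[start:end]).lower()
                score = SequenceMatcher(None, candidate, target).ratio()
                if score > best_score:
                    best_score, best_span = score, (start, end)
        # Only rewrite the transcript when the best span is similar enough to the term.
        if best_span is not None and best_score >= threshold:
            start, end = best_span
            words[start:end] = [term]
    return " ".join(words)

raw = "the break you cephalic artery is clear"
print(apply_custom_vocabulary(raw, ["brachiocephalic"]))
```

On this input the three words "break you cephalic" are the closest match to the custom term, so they collapse into "brachiocephalic". A naive matcher like this can also produce false corrections, which is one reason purpose-trained models are the safer choice in fields like medicine.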

This is a massive problem in fields like healthcare, where one wrong word can have serious consequences. For these situations, you need an AI that's been specifically trained on that vocabulary. Our guide on speech to text medical transcription dives deeper into how these specialized systems are built for precision.

By understanding these variables, you can start taking practical steps—like using better mics, finding a quiet room, and asking people to speak one at a time—to dramatically improve the accuracy of any transcription tool you use.

Putting Audio to Text AI into Practice

It's one thing to talk about the theory, but the real magic of audio to text AI happens when you see it solving actual problems in the real world. Across different fields, it's becoming a fundamental tool that changes how we work with spoken information, driving real productivity and opening up new possibilities.

The proof is in the numbers. The market for AI transcription is already a big deal, sitting at around USD 4.5 billion in 2024. And it's not slowing down. Forecasts predict it will balloon to nearly USD 19.2 billion by 2034. Why? Because industries like healthcare, law, and media are relying on it more and more to get things done faster and more accurately.

Transforming Media and Content Creation

Think about a media company sitting on a mountain of video and audio archives. For years, that content was "dark data"—valuable, but almost impossible to search. If a journalist needed to find a specific quote from hundreds of hours of interviews, they were stuck listening manually, sometimes for days on end.

Now, an audio to text AI can churn through that entire library in a tiny fraction of the time, creating a completely searchable text database. Suddenly, content creators can:

Pinpoint key moments instantly: Find a specific quote or topic in seconds, not hours.

Create subtitles and captions: This makes video content accessible to everyone and gives it a huge SEO boost by making spoken words readable to search engines.

Repurpose content with ease: Pull text highlights from a podcast to quickly create blog posts, social media updates, or promotional snippets.
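Captions are a nice example of how little glue code sits between a transcript and a usable file. Assuming your transcription tool returns segments as (start seconds, end seconds, text), a small Python sketch can turn them into SRT, a widely used subtitle format. The segment data and the helper names `srt_timestamp` and `to_srt` are hypothetical.

```python
def srt_timestamp(seconds):
    """Format seconds as HH:MM:SS,mmm, the timestamp layout used in SRT files."""
    millis = int(round(seconds * 1000))
    hours, millis = divmod(millis, 3_600_000)
    minutes, millis = divmod(millis, 60_000)
    secs, millis = divmod(millis, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"

def to_srt(segments):
    """Build SRT text from (start_seconds, end_seconds, text) tuples."""
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        blocks.append(f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}")
    return "\n\n".join(blocks) + "\n"

# Hypothetical transcript segments with start and end times in seconds.
segments = [
    (0.0, 2.5, "Welcome to the show."),
    (2.5, 6.04, "Today we talk about speech recognition."),
]
print(to_srt(segments))
```

Most video players and hosting platforms accept a file in this shape, so the transcript doubles as a caption track with very little extra work.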

Revolutionizing Healthcare and Legal Fields

In high-stakes professions, every minute and every detail counts. Doctors and lawyers have always been swamped with documentation, often spending their evenings just typing up notes from the day.

AI dictation completely flips that script. A doctor can now speak patient notes directly into an electronic health record (EHR) between appointments. The AI does the typing, freeing them up to focus on patients and cutting down on burnout.

It’s a similar story for legal teams. They can automatically transcribe hours of depositions or courtroom recordings.

Finding a single, critical phrase buried in a 10-hour testimony used to take a paralegal days of tedious work. Now, it's as simple as a keyword search. This drastically speeds up case preparation and can reveal crucial details that might have otherwise been overlooked.
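That keyword search is easy to sketch once the transcript carries timestamps. The example below assumes segments stored as (start seconds, text) pairs. The testimony lines are invented, and `find_phrase` and `clock` are hypothetical helper names.

```python
def find_phrase(segments, phrase):
    """Return (start_seconds, text) for every segment containing the phrase, ignoring case."""
    needle = phrase.lower()
    return [(start, text) for start, text in segments if needle in text.lower()]

def clock(seconds):
    """Format seconds as HH:MM:SS for display."""
    hours, rest = divmod(int(seconds), 3600)
    minutes, secs = divmod(rest, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"

# Invented excerpt from a long, timestamped testimony transcript.
testimony = [
    (12.0, "I arrived at the office around nine."),
    (18250.0, "The contract was signed on the fourth of March."),
    (30111.0, "Nobody mentioned the contract again after that."),
]

for start, text in find_phrase(testimony, "contract"):
    print(clock(start), text)
```

Each hit comes back with the moment it was spoken, so a reviewer can jump straight to that point in the recording instead of scrubbing through hours of audio.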

For anyone who relies on in-depth interviews, our guide on using transcription for research provides some great methods for turning all that audio into data you can actually analyze.

Enhancing Accessibility and Customer Experience

This technology isn't just for internal workflows. Companies are using it to make their services more inclusive and responsive. You can see AI voice technology in action in the article "What Is an AI Receptionist?", which breaks down how these systems work in everyday communication.

Think about it: AI can provide real-time transcripts for customer support calls, giving agents a live script to reference so no detail gets missed. The same technology offers live captioning for webinars and online events, opening them up to people who are deaf or hard of hearing. From media vaults to medical charts, audio to text AI is finally unlocking the value that was trapped in spoken words.

How to Choose the Right AI Transcription Tool

Picking the right audio to text AI tool can feel like a chore, but it doesn't have to be. The secret is to stop looking for the "best" one-size-fits-all solution and start focusing on what you actually need. When you match the tool to your specific workflow, you'll find something that not only gets the job done but actually makes your life easier.

First things first, what are you trying to accomplish? A journalist transcribing a sensitive interview has very different needs than a student recording a lecture. A researcher analyzing focus group data needs features a developer documenting a team meeting might not. Pinpointing your main goal is the single most important step.

Assess Core Features and Accuracy

With your goal in mind, you can start digging into the details. Let's be honest—not all transcription tools are created equal. Some are built for lightning-fast turnarounds, while others are fine-tuned to pull clear dialogue out of a noisy background. Your job is to find the features that solve your biggest headaches.

Here are a few things I always tell people to look for:

Speaker Identification: Can the tool tell who is speaking and when? If you're dealing with interviews, meetings, or panel discussions, this is an absolute must-have.

Custom Vocabulary: This one is a game-changer. If you work in a specialized field like medicine, law, or engineering, the ability to teach the AI specific names, acronyms, and jargon will drastically boost its accuracy.

Timestamping: Does the AI mark when words are spoken? Word-level timestamps are a lifesaver for video editors and podcasters who need to sync text perfectly with the audio.

Integration Capabilities: How easily does this tool slide into your current setup? Check for direct connections to the apps you already use, like Zoom, Google Drive, or whatever is central to your work.

Choosing a tool is like hiring an assistant. You wouldn't hire a generalist for a highly specialized task. Match the AI’s skills—like custom vocabulary or speaker diarization—to the specific job you need it to do.

Compare Pricing Models and Scalability

Finally, let's talk about money. Most AI transcription services use one of a few common pricing models. A pay-as-you-go plan is great if you only need transcriptions here and there. For more consistent work, a monthly subscription almost always offers a better deal. Others charge a flat rate per minute or hour, which keeps your billing predictable.
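A quick back-of-the-envelope comparison shows where the break-even point sits. Every price in this Python sketch is hypothetical (a per-minute rate of 0.10, a 20.00 monthly plan with 600 included minutes, and a 0.05 overage rate), so swap in the real numbers from the plans you are comparing.

```python
def pay_as_you_go_cost(minutes, rate_per_minute):
    """Monthly cost when every minute is billed at a flat rate."""
    return minutes * rate_per_minute

def subscription_cost(minutes, monthly_fee, included_minutes, overage_rate):
    """Monthly cost of a plan with included minutes and a per-minute overage charge."""
    extra_minutes = max(0, minutes - included_minutes)
    return monthly_fee + extra_minutes * overage_rate

# All prices below are hypothetical and only illustrate the comparison.
for minutes in (60, 300, 1200):
    payg = pay_as_you_go_cost(minutes, rate_per_minute=0.10)
    sub = subscription_cost(minutes, monthly_fee=20.0, included_minutes=600, overage_rate=0.05)
    cheaper = "pay-as-you-go" if payg < sub else "subscription"
    print(f"{minutes} min: pay-as-you-go ${payg:.2f}, subscription ${sub:.2f} -> {cheaper}")
```

With these example prices, occasional use favors pay-as-you-go, while anything past 200 minutes a month tips toward the subscription.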

Always check the details. Some plans might look great but have hidden caps on transcription hours or lock advanced features behind a higher paywall. For a really thorough comparison of what's out there, a good guide on the best AI transcription software can save you a ton of research time. Ultimately, you want a tool that not only fits your budget today but can also grow with you down the road.

The Future of AI Voice Technology

The ability of an audio to text AI to turn our spoken words into text is really just the beginning. The truly exciting part is what comes next. These systems are quickly evolving from digital stenographers into something far more sophisticated—tools that can grasp the meaning, intent, and even the emotion behind what we say.

This shift takes voice technology beyond simple transcription and into the realm of genuine comprehension. Think about a future where an AI doesn't just hear your words but understands how you're saying them. That’s where this is all headed, with emerging capabilities designed to analyze tone, sentiment, and other emotional cues.

Moving Beyond Words to Meaning

The next generation of voice AI is set to unlock a much richer layer of communication data. For example, instead of getting just a flat text log of a customer service call, a business could receive an instant analysis of that customer's satisfaction level, all based on the subtle shifts in their tone of voice.

This opens up a whole new world of smarter, more empathetic applications.

Real-Time Language Translation: Imagine having a totally natural conversation with someone who speaks a different language, with an AI translating for both of you instantly, right in the middle of a live call.

Sentiment Analysis: New tools could automatically flag frustration or delight in a customer's voice, giving support teams the cues they need to respond more effectively before a situation escalates.

Emotional Intelligence: Your virtual assistant might one day recognize from your voice that you’re stressed or tired and adjust its responses to be more helpful and supportive.

The goal is to create systems that can engage in more human-like conversations. It's about shifting from simply processing words to interpreting intent, emotion, and context.

This leap forward is fueling explosive market growth. The audio AI recognition market is on track to jump from USD 5.23 billion in 2024 to a staggering USD 19.63 billion by 2033. According to these market trends in audio AI, this growth is largely driven by these advanced new capabilities. As these tools get better at understanding us, they'll show up everywhere—from creating hyper-personalized user experiences to powering security systems that recognize your unique voiceprint.

Let's Tackle Some Common Questions About Audio-to-Text AI

When you're new to the world of AI transcription, a few questions almost always pop up. People are naturally curious about how well it actually works, what it can handle, and—perhaps most importantly—whether their data is safe. Let's break those down.

The big one is always accuracy. You'll often see claims of over 95% accuracy, and while that's achievable, it's really a best-case scenario. Think crystal-clear audio, a single speaker with a standard accent, and zero background noise. The real world is much messier, and things like thick accents, industry-specific jargon, or people talking over each other will inevitably lower that number.
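If you want to check a vendor's accuracy claim against your own recordings, the usual yardstick is word error rate (WER): the number of substituted, inserted, and deleted words divided by the number of words in a trusted reference transcript. Accuracy is then often quoted as one minus WER, though providers don't all measure it the same way. Here is a minimal Python sketch that assumes simple whitespace tokenization and a non-empty reference. The function name `word_error_rate` is hypothetical.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    # previous[j] is the edit distance between the reference words seen so far and hyp[:j].
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)

reference = "the patient has a blocked brachiocephalic artery"
hypothesis = "the patient has a blocked break you cephalic artery"

wer = word_error_rate(reference, hypothesis)
print(f"WER: {wer:.1%}, accuracy: {1 - wer:.1%}")
```

One misheard medical term costs three word errors out of seven here, which shows how quickly jargon can drag a headline accuracy figure down.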

What About Different Languages and Data Security?

"Can it understand my specific dialect or a different language?" That's another frequent question, and the answer is usually yes. The best tools are trained on an enormous amount of diverse audio data from all over the world, which lets them recognize a wide range of languages and accents, although less common accents and dialects can still lower accuracy. Some services even let you tell the AI the language up front, which helps it nail the transcription.

Finally, let's talk about the elephant in the room: what happens to your audio files?

Any trustworthy audio-to-text provider will make security a top priority. This means your data is protected with strong encryption, both when you're uploading it and while it's stored on their servers. While most services comply with major privacy laws like GDPR, it’s always a smart move to quickly scan their privacy policy before you upload anything sensitive.

Getting a handle on these key points—the reality of accuracy, language support, and security protocols—is the best way to find a tool that genuinely fits what you need to do.

Ready to turn your spoken ideas into polished text in seconds? VoiceType AI helps you write up to nine times faster inside any application with 99.7% accuracy. Try it for free and see how much time you can save.

Think of a digital stenographer that listens to any conversation, meeting, or voice note and instantly types out every single word. That's the essence of audio to text AI — a technology that turns spoken language into written, searchable text. It's the magic behind your favorite voice assistants and the engine that automatically generates your meeting summaries.

From Sound Waves to Searchable Text

This process of converting sound into digital words isn't just a neat trick anymore. It's become a critical tool for professionals in all sorts of fields, saving countless hours and unlocking the valuable information that used to be stuck inside audio recordings.

At the heart of it all is a process called Automatic Speech Recognition (ASR). You can think of ASR as the AI's ears and brain working in perfect sync. It listens intently to sound patterns, breaks them down into the smallest phonetic sounds, and then stitches them together into words and sentences that make sense.

The Growing Demand for Voice Data

The real-world uses for this technology are popping up everywhere, and the market growth reflects that. The global speech-to-text API market was valued at roughly USD 4.42 billion in 2025 and is expected to surge to USD 8.57 billion by 2030. That’s nearly double in just five years, showing just how quickly businesses in media, education, and healthcare are adopting it.

You can see the impact most clearly in highly specialized fields. For instance, doctors and nurses are now using AI to capture patient notes, which frees them up from hours of clunky administrative work. If you're curious about how this works in a real-world medical setting, Simbie AI has a great resource on AI medical transcription.

At its core, audio to text AI is a productivity multiplier. It takes the most natural form of human communication—speech—and makes it as easy to edit, search, and analyze as any written document.

In this guide, we’ll break down exactly how this technology works, what makes it accurate (or inaccurate), and how you can start using it to turn spoken ideas into powerful, actionable text.

How AI Learns to Understand Speech

Think about how a person learns a new language. At first, it's all just a jumble of sounds. Over time, you start to pick out the basic building blocks—the individual sounds, or phonemes—like the "k" sound in "cat" or the "sh" sound in "shoe."

An AI starts its journey in a very similar way. It doesn't actually hear "words." It receives a digital stream of sound waves, and its first job is to break that complex audio down into phonetic components it can recognize. This is the first step in a process called Automatic Speech Recognition (ASR), which is basically the AI's digital ear.

The Two Pillars of Understanding

Now, ASR is great at turning raw sound into a sequence of words, but that's only half the battle. A list of words isn't the same as understanding. For instance, the phrase "I scream for ice cream" sounds almost identical to "ice cream for I scream," but one is a common saying and the other is just nonsense.

This is where the second pillar, Natural Language Processing (NLP), steps in. If ASR is the ears, NLP is the brain. NLP models are trained on massive datasets of text—think books, articles, and countless websites. This is how they learn the rules of grammar, context, and the subtle relationships between words. It’s how the AI knows "ice cream" is a common pairing and understands what a typical sentence should look like.

The infographic below gives you a bird's-eye view of how these two systems work in tandem to turn spoken words into clean, coherent text.

This chart helps visualize the journey from a simple soundwave all the way to structured text, highlighting the different stages of capture, processing, and final output.

From Sounds to Sentences

So what does this look like in practice? When you speak into a device, the ASR model gets to work immediately.

Sound Segmentation: First, the AI chops the audio waveform into tiny, millisecond-long slices. Each one has distinct sound properties.

Phoneme Matching: The model then analyzes these slices and matches them to the most likely phonemes it knows from its vast training data.

Word Assembly: Finally, it starts stringing these phonemes together to form potential words. Here's where it gets tricky. Is the speaker saying "recognize speech" or "wreck a nice beach"? Phonetically, they're nearly identical.

This is where the AI's real intelligence kicks in. The NLP component steps up to evaluate the different word combinations based on pure context.

The AI doesn't just hear sounds; it calculates probabilities. After analyzing billions of sentences, it knows that "recognize speech" is a far more common and logical phrase, making it the most probable transcription.

This predictive power is what enables a modern audio to text AI to navigate homophones, slang, and complex sentences with such surprising accuracy. At every step, the system is making an educated guess, constantly refining its output based on what it knows about real human communication. It's this powerful combination—ASR for listening and NLP for understanding—that turns a chaotic stream of sound into structured, meaningful text.

Why AI Transcription Accuracy Varies

You've probably noticed that the accuracy of an audio to text AI can feel like a moving target. One day it’s flawless, the next it’s full of mistakes. The reason is simple: not all audio is created equal. While the best AI models boast over 95% accuracy, that number assumes you're feeding them pristine, studio-quality sound. In the real world, the AI’s performance is a direct reflection of the audio quality it has to work with.

The core of the issue is a classic signal-to-noise problem. The words you want transcribed are the “signal.” Everything else—the humming air conditioner, the person coughing in the background, multiple people talking at once—is “noise.” The more noise you have, the harder it is for the AI to pick out the signal.

Even things you might not think about can throw the AI for a loop. A speaker standing too far from the mic, using a cheap headset, or talking in a room with a bad echo can all distort the audio just enough to confuse the system’s pattern-matching algorithms.

Key Factors That Influence Accuracy

A handful of specific variables can make or break your transcription quality. Getting a handle on these is the first step to getting cleaner, more reliable results.

Audio Clarity: This is the big one. Clear audio from a good microphone placed close to the speaker will always outperform a recording made on a phone sitting in the middle of a noisy conference room.

Background Noise: Competing sounds are the AI’s kryptonite. A bustling coffee shop, passing sirens, or even quiet music can muddy the phonetic details the AI relies on, causing it to mishear or skip words entirely.

Speaker Overlap: When people talk over each other, their sound waves get mashed together into a confusing mess. Even the smartest AI systems struggle to untangle that jumble into separate, coherent sentences.

Accents and Pacing: Modern AI is trained on a huge variety of speech patterns, but heavy accents, regional slang, or speaking exceptionally fast can still be a challenge. If the speech deviates too much from the patterns the AI knows, mistakes are more likely.

The table below breaks down these common factors, explaining their direct impact and what you can do to manage them.

Factors Impacting Audio to Text AI Accuracy

Factor | Impact on Accuracy | How to Optimize |

|---|---|---|

Audio Quality | Low-quality recordings with distortion or low bitrates are difficult for an AI to interpret. | Use a high-quality microphone and record in a format like WAV or FLAC for best results. |

Background Noise | Competing sounds from traffic, music, or other conversations can obscure the primary speaker's words. | Record in a quiet, controlled environment. Use noise-canceling microphones if possible. |

Speaker Clarity | Mumbling, fast speech, or inconsistent volume levels can lead to transcription errors. | Encourage speakers to enunciate clearly and speak at a moderate, consistent pace. |

Accents & Dialects | Strong, unfamiliar accents may not be well-represented in the AI's training data, causing misinterpretations. | Choose an AI model that specifies training on diverse accents or allows for accent adaptation. |

Specialized Jargon | Technical, medical, or legal terms that aren't in the AI’s general vocabulary will likely be transcribed incorrectly. | Use a transcription service with custom vocabulary features or one specifically trained for your industry. |

Speaker Overlap | When multiple people speak at once, the AI struggles to separate the different voices and words. | Encourage speakers to take turns. Use multi-channel recording to isolate each speaker's audio track. |

As you can see, a little bit of prep work on the front end can make a huge difference in the final transcript.

Dealing With Specialized Terminology

Another major hurdle for a general-purpose AI is industry-specific jargon. An AI trained on everyday conversations isn't going to recognize complex legal terms, scientific names, or company-specific acronyms. Instead, it will just guess the closest phonetic match it knows.

The AI transcribes what it thinks it hears based on probability. If it has never encountered the term "brachiocephalic artery," it might transcribe it as "break you cephalic artery" because those are more common words in its training data.

This is a massive problem in fields like healthcare, where one wrong word can have serious consequences. For these situations, you need an AI that's been specifically trained on that vocabulary. Our guide on speech to text medical transcription dives deeper into how these specialized systems are built for precision.

By understanding these variables, you can start taking practical steps—like using better mics, finding a quiet room, and asking people to speak one at a time—to dramatically improve the accuracy of any transcription tool you use.

Putting Audio to Text AI into Practice

It's one thing to talk about the theory, but the real magic of audio to text AI happens when you see it solving actual problems in the real world. This isn't just a neat trick anymore. Across different fields, it's becoming a fundamental tool that changes how we work with spoken information, driving real productivity and opening up new possibilities.

The proof is in the numbers. The market for AI transcription is already a big deal, sitting at around USD 4.5 billion in 2024. And it's not slowing down. Forecasts predict it will balloon to nearly USD 19.2 billion by 2034. Why? Because industries like healthcare, law, and media are relying on it more and more to get things done faster and more accurately.

Transforming Media and Content Creation

Think about a media company sitting on a mountain of video and audio archives. For years, that content was "dark data"—valuable, but almost impossible to search. If a journalist needed to find a specific quote from hundreds of hours of interviews, they were stuck listening manually, sometimes for days on end.

Now, an audio to text AI can churn through that entire library in a tiny fraction of the time, creating a completely searchable text database. Suddenly, content creators can:

Pinpoint key moments instantly: Find a specific quote or topic in seconds, not hours.

Create subtitles and captions: This makes video content accessible to everyone and gives it a huge SEO boost by making spoken words readable to search engines.

Repurpose content with ease: Pull text highlights from a podcast to quickly create blog posts, social media updates, or promotional snippets.
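As a rough idea of how captions come out of a transcript, the sketch below turns timestamped segments into the SubRip (SRT) subtitle format. It assumes your transcription tool can hand you segments as (start, end, text) tuples in seconds; that shape, the sample sentences, and the function names are assumptions for illustration.

```python
def to_srt_time(seconds):
    """Format seconds as the HH:MM:SS,mmm timestamp used in SRT files."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments):
    """Build SRT text from (start_seconds, end_seconds, text) tuples."""
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        timing = f"{to_srt_time(start)} --> {to_srt_time(end)}"
        blocks.append(f"{index}\n{timing}\n{text}\n")
    # Joining with a newline leaves the blank line SRT expects between cues.
    return "\n".join(blocks)


# Made-up segments standing in for real transcription output.
segments = [
    (0.0, 2.5, "Welcome back to the show."),
    (2.5, 6.0, "Today we are talking about transcription."),
]
print(segments_to_srt(segments))
```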

Revolutionizing Healthcare and Legal Fields

In high-stakes professions, every minute and every detail counts. Doctors and lawyers have always been swamped with documentation, often spending their evenings just typing up notes from the day.

AI dictation completely flips that script. A doctor can now speak patient notes directly into an electronic health record (EHR) between appointments. The AI does the typing, freeing them up to focus on patients and cutting down on burnout.

It’s a similar story for legal teams. They can automatically transcribe hours of depositions or courtroom recordings.

Finding a single, critical phrase buried in a 10-hour testimony used to take a paralegal days of tedious work. Now, it's as simple as a keyword search. This drastically speeds up case preparation and can reveal crucial details that might have otherwise been overlooked.
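Once a recording has been transcribed with timestamps, that keyword search really can be a few lines of code. The sketch below assumes the transcript is available as (start_seconds, text) pairs; the sample testimony and the `find_phrase` name are invented for illustration.

```python
def find_phrase(segments, phrase):
    """Return (start_seconds, text) for every segment containing the phrase."""
    needle = phrase.lower()
    return [(start, text) for start, text in segments if needle in text.lower()]


# Invented excerpt standing in for a long deposition transcript.
testimony = [
    (12.0, "I arrived at the office around nine."),
    (3605.5, "The contract was signed on the fourth of March."),
    (7210.0, "Nobody mentioned the contract again after that."),
]

for start, text in find_phrase(testimony, "contract"):
    print(f"{start:>8.1f}s  {text}")
```

Because each hit carries its start time, a reviewer can jump straight to that moment in the recording to check the wording against the audio.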

For anyone who relies on in-depth interviews, our guide on using transcription for research provides some great methods for turning all that audio into data you can actually analyze.

Enhancing Accessibility and Customer Experience

This technology isn't just for internal workflows. Companies are using it to make their services more inclusive and responsive. For examples of AI voice technology in action, see the guide "What Is an AI Receptionist?", which breaks down how these systems work in everyday communication.

Think about it: AI can provide real-time transcripts for customer support calls, giving agents a live script to reference so no detail gets missed. The same technology offers live captioning for webinars and online events, opening them up to people who are deaf or hard of hearing. From media vaults to medical charts, audio to text AI is finally unlocking the value that was trapped in spoken words.

How to Choose the Right AI Transcription Tool

Picking the right audio to text AI tool can feel like a chore, but it doesn't have to be. The secret is to stop looking for the "best" one-size-fits-all solution and start focusing on what you actually need. When you match the tool to your specific workflow, you'll find something that not only gets the job done but actually makes your life easier.

First things first, what are you trying to accomplish? A journalist transcribing a sensitive interview has very different needs than a student recording a lecture. A researcher analyzing focus group data needs features a developer documenting a team meeting might not. Pinpointing your main goal is the single most important step.

Assess Core Features and Accuracy

With your goal in mind, you can start digging into the details. Let's be honest—not all transcription tools are created equal. Some are built for lightning-fast turnarounds, while others are fine-tuned to pull clear dialogue out of a noisy background. Your job is to find the features that solve your biggest headaches.

Here are a few things I always tell people to look for:

Speaker Identification: Can the tool tell who is speaking and when? This feature is often called speaker diarization. If you're dealing with interviews, meetings, or panel discussions, it is an absolute must-have.

Custom Vocabulary: This one is a game-changer. If you work in a specialized field like medicine, law, or engineering, the ability to teach the AI specific names, acronyms, and jargon will drastically boost its accuracy.

Timestamping: Does the AI mark when words are spoken? Word-level timestamps are a lifesaver for video editors and podcasters who need to sync text perfectly with the audio.

Integration Capabilities: How easily does this tool slide into your current setup? Check for direct connections to the apps you already use, like Zoom, Google Drive, or whatever is central to your work.

Choosing a tool is like hiring an assistant. You wouldn't hire a generalist for a highly specialized task. Match the AI’s skills—like custom vocabulary or speaker diarization—to the specific job you need it to do.
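To see why speaker labels and word-level timestamps matter together, here is a small sketch of what you can do with them. It assumes the tool returns each word with a start time, end time, and speaker label; the `Word` structure and the "S1"/"S2" labels are hypothetical, since real tools each use their own output format.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float   # seconds from the beginning of the recording
    end: float
    speaker: str   # label assigned by the tool, e.g. "S1"


def group_into_turns(words):
    """Merge consecutive words from one speaker into (speaker, start, end, text)."""
    turns = []
    for word in words:
        if turns and turns[-1][0] == word.speaker:
            speaker, start, _, text = turns[-1]
            turns[-1] = (speaker, start, word.end, f"{text} {word.text}")
        else:
            turns.append((word.speaker, word.start, word.end, word.text))
    return turns


# Made-up words standing in for real transcription output.
words = [
    Word("Hi", 0.0, 0.3, "S1"),
    Word("there", 0.3, 0.6, "S1"),
    Word("Hello", 0.8, 1.2, "S2"),
]
for speaker, start, end, text in group_into_turns(words):
    print(f"[{start:.1f}-{end:.1f}] {speaker}: {text}")
```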

Compare Pricing Models and Scalability

Finally, let's talk about money. Most AI transcription services use one of a few common pricing models. A pay-as-you-go plan is great if you only need transcriptions here and there. For more consistent work, a monthly subscription usually offers a better deal once your monthly volume is high enough to cover the fee. Others charge a flat rate per minute or hour, which keeps your billing predictable.

Always check the details. Some plans might look great but have hidden caps on transcription hours or lock advanced features behind a higher paywall. For a really thorough comparison of what's out there, a good guide on the best AI transcription software can save you a ton of research time. Ultimately, you want a tool that not only fits your budget today but can also grow with you down the road.
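A quick way to compare plans is to plug your expected monthly minutes into each pricing model and see which comes out cheaper. The sketch below does that with entirely made-up prices (25 cents per minute pay-as-you-go versus a 20-dollar subscription with 300 included minutes); swap in the real numbers from the plans you are considering.

```python
def pay_as_you_go_cents(minutes, cents_per_minute):
    """Cost when every minute is billed at the same rate."""
    return minutes * cents_per_minute


def subscription_cents(minutes, base_fee_cents, included_minutes, overage_cents):
    """Cost of a flat monthly fee plus a per-minute charge past the cap."""
    extra_minutes = max(0, minutes - included_minutes)
    return base_fee_cents + extra_minutes * overage_cents


def cheaper_plan(minutes):
    """Compare two hypothetical plans for a given monthly volume."""
    payg = pay_as_you_go_cents(minutes, cents_per_minute=25)
    sub = subscription_cents(minutes, base_fee_cents=2000,
                             included_minutes=300, overage_cents=15)
    return "pay-as-you-go" if payg < sub else "subscription"


for minutes in (60, 200, 500):
    print(minutes, "minutes per month:", cheaper_plan(minutes))
```

Prices are kept in whole cents so the comparison does not run into floating-point rounding.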

The Future of AI Voice Technology

The ability of an audio to text AI to turn our spoken words into text is really just the beginning. The truly exciting part is what comes next. These systems are quickly evolving from digital stenographers into something far more sophisticated—tools that can grasp the meaning, intent, and even the emotion behind what we say.

This shift takes voice technology beyond simple transcription and into the realm of genuine comprehension. Think about a future where an AI doesn't just hear your words but understands how you're saying them. That’s where this is all headed, with emerging capabilities designed to analyze tone, sentiment, and other emotional cues.

Moving Beyond Words to Meaning

The next generation of voice AI is set to unlock a much richer layer of communication data. For example, instead of getting just a flat text log of a customer service call, a business could receive an instant analysis of that customer's satisfaction level, all based on the subtle shifts in their tone of voice.

This opens up a whole new world of smarter, more empathetic applications.

Real-Time Language Translation: Imagine having a totally natural conversation with someone who speaks a different language, with an AI translating for both of you instantly, right in the middle of a live call.

Sentiment Analysis: New tools could automatically flag frustration or delight in a customer's voice, giving support teams the cues they need to respond more effectively before a situation escalates.

Emotional Intelligence: Your virtual assistant might one day recognize from your voice that you’re stressed or tired and adjust its responses to be more helpful and supportive.

The goal is to create systems that can engage in more human-like conversations. It's about shifting from simply processing words to interpreting intent, emotion, and context.

This leap forward is fueling explosive market growth. The audio AI recognition market is on track to jump from USD 5.23 billion in 2024 to a staggering USD 19.63 billion by 2033. According to these market trends in audio AI, this growth is largely driven by these advanced new capabilities. As these tools get better at understanding us, they'll show up everywhere—from creating hyper-personalized user experiences to powering security systems that recognize your unique voiceprint.

Let's Tackle Some Common Questions About Audio-to-Text AI

When you're new to the world of AI transcription, a few questions almost always pop up. People are naturally curious about how well it actually works, what it can handle, and—perhaps most importantly—whether their data is safe. Let's break those down.

The big one is always accuracy. You'll often see claims of over 95% accuracy, and while that's achievable, it's really a best-case scenario. Think crystal-clear audio, a single speaker with a standard accent, and zero background noise. The real world is much messier, and things like thick accents, industry-specific jargon, or people talking over each other will inevitably lower that number.
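Vendors rarely spell out how they measure accuracy, but a common yardstick in speech recognition is word error rate (WER): the number of word substitutions, insertions, and deletions needed to turn the AI's output into a correct reference transcript, divided by the length of the reference. Accuracy is then often quoted as one minus WER. The sketch below computes WER for two short strings; treating a given "95%" claim as one minus WER is an assumption, so check how your provider defines it.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # previous[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


reference = "the brachiocephalic artery is narrowed"
hypothesis = "the break you cephalic artery is narrowed"
print(f"WER: {word_error_rate(reference, hypothesis):.0%}")
```

Notice how a single misheard medical term counts as three word errors here, which is why jargon-heavy audio can drag a score down so quickly.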

What About Different Languages and Data Security?

"Can it understand my specific dialect or a different language?" That's another frequent question, and the answer is a resounding yes. The best tools are trained on an enormous amount of diverse audio data from all over the world. This allows them to get surprisingly good at recognizing a huge range of languages and accents. Some services even let you give the AI a heads-up on the language, which helps it nail the transcription.

Finally, let's talk about the elephant in the room: what happens to your audio files?

Any trustworthy audio-to-text provider will make security a top priority. This means your data is protected with strong encryption, both when you're uploading it and while it's stored on their servers. Many services state that they comply with major privacy laws like GDPR, but it's still a smart move to read their privacy policy before you upload anything sensitive.

Getting a handle on these key points—the reality of accuracy, language support, and security protocols—is the best way to find a tool that genuinely fits what you need to do.

Ready to turn your spoken ideas into polished text in seconds? VoiceType AI helps you write up to nine times faster inside any application with 99.7% accuracy. Try it for free and see how much time you can save.

Think of a digital stenographer that listens to any conversation, meeting, or voice note and instantly types out every single word. That's the essence of audio to text AI — a technology that turns spoken language into written, searchable text. It's the magic behind your favorite voice assistants and the engine that automatically generates your meeting summaries.

From Sound Waves to Searchable Text

This process of converting sound into digital words isn't just a neat trick anymore. It's become a critical tool for professionals in all sorts of fields, saving countless hours and unlocking the valuable information that used to be stuck inside audio recordings.

At the heart of it all is a process called Automatic Speech Recognition (ASR). You can think of ASR as the AI's ears and brain working in perfect sync. It listens intently to sound patterns, breaks them down into the smallest phonetic sounds, and then stitches them together into words and sentences that make sense.

The Growing Demand for Voice Data

The real-world uses for this technology are popping up everywhere, and the market growth reflects that. The global speech-to-text API market was valued at roughly USD 4.42 billion in 2025 and is expected to surge to USD 8.57 billion by 2030. That’s nearly double in just five years, showing just how quickly businesses in media, education, and healthcare are adopting it.

You can see the impact most clearly in highly specialized fields. For instance, doctors and nurses are now using AI to capture patient notes, which frees them up from hours of clunky administrative work. If you're curious about how this works in a real-world medical setting, Simbie AI has a great resource on AI medical transcription.

At its core, audio to text AI is a productivity multiplier. It takes the most natural form of human communication—speech—and makes it as easy to edit, search, and analyze as any written document.

In this guide, we’ll break down exactly how this technology works, what makes it accurate (or inaccurate), and how you can start using it to turn spoken ideas into powerful, actionable text.

How AI Learns to Understand Speech

Think about how a person learns a new language. At first, it's all just a jumble of sounds. Over time, you start to pick out the basic building blocks—the individual sounds, or phonemes—like the "k" sound in "cat" or the "sh" sound in "shoe."

An AI starts its journey in a very similar way. It doesn't actually hear "words." It receives a digital stream of sound waves, and its first job is to break that complex audio down into phonetic components it can recognize. This is the first step in a process called Automatic Speech Recognition (ASR), which is basically the AI's digital ear.

The Two Pillars of Understanding

Now, ASR is great at turning raw sound into a sequence of words, but that's only half the battle. A list of words isn't the same as understanding. For instance, the phrase "I scream for ice cream" sounds almost identical to "ice cream for I scream," but one is a common saying and the other is just nonsense.

This is where the second pillar, Natural Language Processing (NLP), steps in. If ASR is the ears, NLP is the brain. NLP models are trained on massive datasets of text—think books, articles, and countless websites. This is how they learn the rules of grammar, context, and the subtle relationships between words. It’s how the AI knows "ice cream" is a common pairing and understands what a typical sentence should look like.

The infographic below gives you a bird's-eye view of how these two systems work in tandem to turn spoken words into clean, coherent text.

This chart helps visualize the journey from a simple soundwave all the way to structured text, highlighting the different stages of capture, processing, and final output.

From Sounds to Sentences

So what does this look like in practice? When you speak into a device, the ASR model gets to work immediately.

Sound Segmentation: First, the AI chops the audio waveform into tiny, millisecond-long slices. Each one has distinct sound properties.

Phoneme Matching: The model then analyzes these slices and matches them to the most likely phonemes it knows from its vast training data.

Word Assembly: Finally, it starts stringing these phonemes together to form potential words. Here's where it gets tricky. Is the speaker saying "recognize speech" or "wreck a nice beach"? Phonetically, they're nearly identical.

This is where the AI's real intelligence kicks in. The NLP component steps up to evaluate the different word combinations based on pure context.

The AI doesn't just hear sounds; it calculates probabilities. After analyzing billions of sentences, it knows that "recognize speech" is a far more common and logical phrase, making it the most probable transcription.

This predictive power is what enables a modern audio to text AI to navigate homophones, slang, and complex sentences with such surprising accuracy. At every step, the system is making an educated guess, constantly refining its output based on what it knows about real human communication. It's this powerful combination—ASR for listening and NLP for understanding—that turns a chaotic stream of sound into structured, meaningful text.

Why AI Transcription Accuracy Varies

You've probably noticed that the accuracy of an audio to text AI can feel like a moving target. One day it’s flawless, the next it’s full of mistakes. The reason is simple: not all audio is created equal. While the best AI models boast over 95% accuracy, that number assumes you're feeding them pristine, studio-quality sound. In the real world, the AI’s performance is a direct reflection of the audio quality it has to work with.

The core of the issue is a classic signal-to-noise problem. The words you want transcribed are the “signal.” Everything else—the humming air conditioner, the person coughing in the background, multiple people talking at once—is “noise.” The more noise you have, the harder it is for the AI to pick out the signal.

Even things you might not think about can throw the AI for a loop. A speaker standing too far from the mic, using a cheap headset, or talking in a room with a bad echo can all distort the audio just enough to confuse the system’s pattern-matching algorithms.

Key Factors That Influence Accuracy

A handful of specific variables can make or break your transcription quality. Getting a handle on these is the first step to getting cleaner, more reliable results.

Audio Clarity: This is the big one. Clear audio from a good microphone placed close to the speaker will always outperform a recording made on a phone sitting in the middle of a noisy conference room.

Background Noise: Competing sounds are the AI’s kryptonite. A bustling coffee shop, passing sirens, or even quiet music can muddy the phonetic details the AI relies on, causing it to mishear or skip words entirely.

Speaker Overlap: When people talk over each other, their sound waves get mashed together into a confusing mess. Even the smartest AI systems struggle to untangle that jumble into separate, coherent sentences.

Accents and Pacing: Modern AI is trained on a huge variety of speech patterns, but heavy accents, regional slang, or speaking exceptionally fast can still be a challenge. If the speech deviates too much from the patterns the AI knows, mistakes are more likely.

The table below breaks down these common factors, explaining their direct impact and what you can do to manage them.

Factors Impacting Audio to Text AI Accuracy

Factor | Impact on Accuracy | How to Optimize |

|---|---|---|

Audio Quality | Low-quality recordings with distortion or low bitrates are difficult for an AI to interpret. | Use a high-quality microphone and record in a format like WAV or FLAC for best results. |

Background Noise | Competing sounds from traffic, music, or other conversations can obscure the primary speaker's words. | Record in a quiet, controlled environment. Use noise-canceling microphones if possible. |

Speaker Clarity | Mumbling, fast speech, or inconsistent volume levels can lead to transcription errors. | Encourage speakers to enunciate clearly and speak at a moderate, consistent pace. |

Accents & Dialects | Strong, unfamiliar accents may not be well-represented in the AI's training data, causing misinterpretations. | Choose an AI model that specifies training on diverse accents or allows for accent adaptation. |

Specialized Jargon | Technical, medical, or legal terms that aren't in the AI’s general vocabulary will likely be transcribed incorrectly. | Use a transcription service with custom vocabulary features or one specifically trained for your industry. |

Speaker Overlap | When multiple people speak at once, the AI struggles to separate the different voices and words. | Encourage speakers to take turns. Use multi-channel recording to isolate each speaker's audio track. |

As you can see, a little bit of prep work on the front end can make a huge difference in the final transcript.

Dealing With Specialized Terminology

Another major hurdle for a general-purpose AI is industry-specific jargon. An AI trained on everyday conversations isn't going to recognize complex legal terms, scientific names, or company-specific acronyms. Instead, it will just guess the closest phonetic match it knows.

The AI transcribes what it thinks it hears based on probability. If it has never encountered the term "brachiocephalic artery," it might transcribe it as "break you cephalic artery" because those are more common words in its training data.

This is a massive problem in fields like healthcare, where one wrong word can have serious consequences. For these situations, you need an AI that's been specifically trained on that vocabulary. Our guide on speech to text medical transcription dives deeper into how these specialized systems are built for precision.

By understanding these variables, you can start taking practical steps—like using better mics, finding a quiet room, and asking people to speak one at a time—to dramatically improve the accuracy of any transcription tool you use.

Putting Audio to Text AI into Practice

It's one thing to talk about the theory, but the real magic of audio to text AI happens when you see it solving actual problems in the real world. This isn't just a neat trick anymore. Across different fields, it's becoming a fundamental tool that changes how we work with spoken information, driving real productivity and opening up new possibilities.

The proof is in the numbers. The market for AI transcription is already a big deal, sitting at around USD 4.5 billion in 2024. And it's not slowing down. Forecasts predict it will balloon to nearly USD 19.2 billion by 2034. Why? Because industries like healthcare, law, and media are relying on it more and more to get things done faster and more accurately.

Transforming Media and Content Creation

Think about a media company sitting on a mountain of video and audio archives. For years, that content was "dark data"—valuable, but almost impossible to search. If a journalist needed to find a specific quote from hundreds of hours of interviews, they were stuck listening manually, sometimes for days on end.

Now, an audio to text AI can churn through that entire library in a tiny fraction of the time, creating a completely searchable text database. Suddenly, content creators can:

Pinpoint key moments instantly: Find a specific quote or topic in seconds, not hours.

Create subtitles and captions: This makes video content accessible to everyone and gives it a huge SEO boost by making spoken words readable to search engines.

Repurpose content with ease: Pull text highlights from a podcast to quickly create blog posts, social media updates, or promotional snippets.

Revolutionizing Healthcare and Legal Fields

In high-stakes professions, every minute and every detail counts. Doctors and lawyers have always been swamped with documentation, often spending their evenings just typing up notes from the day.

AI dictation completely flips that script. A doctor can now speak patient notes directly into an electronic health record (EHR) between appointments. The AI does the typing, freeing them up to focus on patients and cutting down on burnout.

It’s a similar story for legal teams. They can automatically transcribe hours of depositions or courtroom recordings.

Finding a single, critical phrase buried in a 10-hour testimony used to take a paralegal days of tedious work. Now, it's as simple as a keyword search. This drastically speeds up case preparation and can reveal crucial details that might have otherwise been overlooked.

For anyone who relies on in-depth interviews, our guide on using transcription for research provides some great methods for turning all that audio into data you can actually analyze.

Enhancing Accessibility and Customer Experience

This technology isn't just for internal workflows. Companies are using it to make their services more inclusive and responsive. You can see great examples of AI voice technology in action by learning What Is an AI Receptionist?, which breaks down how these systems work in everyday communication.

Think about it: AI can provide real-time transcripts for customer support calls, giving agents a live script to reference so no detail gets missed. The same technology offers live captioning for webinars and online events, opening them up to people who are deaf or hard of hearing. From media vaults to medical charts, audio to text AI is finally unlocking the value that was trapped in spoken words.

How to Choose the Right AI Transcription Tool

Picking the right audio to text AI tool can feel like a chore, but it doesn't have to be. The secret is to stop looking for the "best" one-size-fits-all solution and start focusing on what you actually need. When you match the tool to your specific workflow, you'll find something that not only gets the job done but actually makes your life easier.

First things first, what are you trying to accomplish? A journalist transcribing a sensitive interview has very different needs than a student recording a lecture. A researcher analyzing focus group data needs features a developer documenting a team meeting might not. Pinpointing your main goal is the single most important step.

Assess Core Features and Accuracy

With your goal in mind, you can start digging into the details. Let's be honest—not all transcription tools are created equal. Some are built for lightning-fast turnarounds, while others are fine-tuned to pull clear dialogue out of a noisy background. Your job is to find the features that solve your biggest headaches.

Here are a few things I always tell people to look for:

Speaker Identification: Can the tool tell who is speaking and when? If you're dealing with interviews, meetings, or panel discussions, this is an absolute must-have.

Custom Vocabulary: This one is a game-changer. If you work in a specialized field like medicine, law, or engineering, the ability to teach the AI specific names, acronyms, and jargon will drastically boost its accuracy.

Timestamping: Does the AI mark when words are spoken? Word-level timestamps are a lifesaver for video editors and podcasters who need to sync text perfectly with the audio.

Integration Capabilities: How easily does this tool slide into your current setup? Check for direct connections to the apps you already use, like Zoom, Google Drive, or whatever is central to your work.

Choosing a tool is like hiring an assistant. You wouldn't hire a generalist for a highly specialized task. Match the AI’s skills—like custom vocabulary or speaker diarization—to the specific job you need it to do.

Compare Pricing Models and Scalability

Finally, let's talk about money. Most AI transcription services use one of a few common pricing models. A pay-as-you-go plan is great if you only need transcriptions here and there. For more consistent work, a monthly subscription almost always offers a better deal. Others charge a flat rate per minute or hour, which keeps your billing predictable.

Always check the details. Some plans might look great but have hidden caps on transcription hours or lock advanced features behind a higher paywall. For a really thorough comparison of what's out there, a good guide on the best AI transcription software can save you a ton of research time. Ultimately, you want a tool that not only fits your budget today but can also grow with you down the road.

The Future of AI Voice Technology

The ability of an audio to text AI to turn our spoken words into text is really just the beginning. The truly exciting part is what comes next. These systems are quickly evolving from digital stenographers into something far more sophisticated—tools that can grasp the meaning, intent, and even the emotion behind what we say.

This shift takes voice technology beyond simple transcription and into the realm of genuine comprehension. Think about a future where an AI doesn't just hear your words but understands how you're saying them. That’s where this is all headed, with emerging capabilities designed to analyze tone, sentiment, and other emotional cues.

Moving Beyond Words to Meaning

The next generation of voice AI is set to unlock a much richer layer of communication data. For example, instead of getting just a flat text log of a customer service call, a business could receive an instant analysis of that customer's satisfaction level, all based on the subtle shifts in their tone of voice.

This opens up a whole new world of smarter, more empathetic applications.

Real-Time Language Translation: Imagine having a totally natural conversation with someone who speaks a different language, with an AI translating for both of you instantly, right in the middle of a live call.

Sentiment Analysis: New tools could automatically flag frustration or delight in a customer's voice, giving support teams the cues they need to respond more effectively before a situation escalates.

Emotional Intelligence: Your virtual assistant might one day recognize from your voice that you’re stressed or tired and adjust its responses to be more helpful and supportive.

The goal is to create systems that can engage in more human-like conversations. It's about shifting from simply processing words to interpreting intent, emotion, and context.

This leap forward is fueling explosive market growth. The audio AI recognition market is on track to jump from USD 5.23 billion in 2024 to a staggering USD 19.63 billion by 2033. According to these market trends in audio AI, this growth is largely driven by these advanced new capabilities. As these tools get better at understanding us, they'll show up everywhere—from creating hyper-personalized user experiences to powering security systems that recognize your unique voiceprint.

Let's Tackle Some Common Questions About Audio-to-Text AI

When you're new to the world of AI transcription, a few questions almost always pop up. People are naturally curious about how well it actually works, what it can handle, and—perhaps most importantly—whether their data is safe. Let's break those down.

The big one is always accuracy. You'll often see claims of over 95% accuracy, and while that's achievable, it's really a best-case scenario. Think crystal-clear audio, a single speaker with a standard accent, and zero background noise. The real world is much messier, and things like thick accents, industry-specific jargon, or people talking over each other will inevitably lower that number.

What About Different Languages and Data Security?

"Can it understand my specific dialect or a different language?" That's another frequent question, and the answer is a resounding yes. The best tools are trained on an enormous amount of diverse audio data from all over the world. This allows them to get surprisingly good at recognizing a huge range of languages and accents. Some services even let you give the AI a heads-up on the language, which helps it nail the transcription.

Finally, let's talk about the elephant in the room: what happens to your audio files?

Any trustworthy audio-to-text provider will make security a top priority. This means your data is protected with strong encryption, both when you're uploading it and while it's stored on their servers. While most services comply with major privacy laws like GDPR, it’s always a smart move to quickly scan their privacy policy before you upload anything sensitive.

Getting a handle on these key points—the reality of accuracy, language support, and security protocols—is the best way to find a tool that genuinely fits what you need to do.

Ready to turn your spoken ideas into polished text in seconds? VoiceType AI helps you write up to nine times faster inside any application with 99.7% accuracy. Try it for free and see how much time you can save.

In this guide, we’ll break down exactly how this technology works, what makes it accurate (or inaccurate), and how you can start using it to turn spoken ideas into powerful, actionable text.

How AI Learns to Understand Speech

Think about how a person learns a new language. At first, it's all just a jumble of sounds. Over time, you start to pick out the basic building blocks—the individual sounds, or phonemes—like the "k" sound in "cat" or the "sh" sound in "shoe."

An AI starts its journey in a very similar way. It doesn't actually hear "words." It receives a digital stream of sound waves, and its first job is to break that complex audio down into phonetic components it can recognize. This is the first step in a process called Automatic Speech Recognition (ASR), which is basically the AI's digital ear.

The Two Pillars of Understanding

Now, ASR is great at turning raw sound into a sequence of words, but that's only half the battle. A list of words isn't the same as understanding. For instance, the phrase "I scream for ice cream" sounds almost identical to "ice cream for I scream," but one is a common saying and the other is just nonsense.

This is where the second pillar, Natural Language Processing (NLP), steps in. If ASR is the ears, NLP is the brain. NLP models are trained on massive datasets of text—think books, articles, and countless websites. This is how they learn the rules of grammar, context, and the subtle relationships between words. It’s how the AI knows "ice cream" is a common pairing and understands what a typical sentence should look like.

The infographic below gives you a bird's-eye view of how these two systems work in tandem to turn spoken words into clean, coherent text.

This chart helps visualize the journey from a simple soundwave all the way to structured text, highlighting the different stages of capture, processing, and final output.

From Sounds to Sentences

So what does this look like in practice? When you speak into a device, the ASR model gets to work immediately.

Sound Segmentation: First, the AI chops the audio waveform into tiny slices, each typically just a few tens of milliseconds long. Each slice has distinct sound properties.

Phoneme Matching: The model then analyzes these slices and matches them to the most likely phonemes it knows from its vast training data.

Word Assembly: Finally, it starts stringing these phonemes together to form potential words. Here's where it gets tricky. Is the speaker saying "recognize speech" or "wreck a nice beach"? Phonetically, they're nearly identical.

This is where the AI's real intelligence kicks in. The NLP component steps up to evaluate the different word combinations based on pure context.

The AI doesn't just hear sounds; it calculates probabilities. After analyzing billions of sentences, it knows that "recognize speech" is a far more common and logical phrase, making it the most probable transcription.

This predictive power is what enables a modern audio to text AI to navigate homophones, slang, and complex sentences with such surprising accuracy. At every step, the system is making an educated guess, constantly refining its output based on what it knows about real human communication. It's this powerful combination—ASR for listening and NLP for understanding—that turns a chaotic stream of sound into structured, meaningful text.
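To make the "calculating probabilities" idea concrete, here is a minimal sketch of how a language model might rank two candidate transcripts. It is a toy: the bigram counts, the vocabulary size, and the function names are all invented for illustration, and a production system would combine a score like this with acoustic evidence rather than rely on text statistics alone.

```python
import math

# Hypothetical bigram counts standing in for a language model trained on
# billions of sentences. Every number here is invented for illustration.
BIGRAM_COUNTS = {
    ("recognize", "speech"): 900,
    ("wreck", "a"): 40,
    ("a", "nice"): 700,
    ("nice", "beach"): 60,
}
UNIGRAM_COUNTS = {"recognize": 1000, "wreck": 200, "a": 50000, "nice": 3000}
VOCAB_SIZE = 10000  # assumed vocabulary size, used for add-one smoothing


def log_prob(words):
    """Sum of add-one-smoothed bigram log-probabilities for a word list."""
    total = 0.0
    for prev, word in zip(words, words[1:]):
        count = BIGRAM_COUNTS.get((prev, word), 0)
        context = UNIGRAM_COUNTS.get(prev, 0)
        total += math.log((count + 1) / (context + VOCAB_SIZE))
    return total


def pick_transcript(candidates):
    """Return the candidate the toy language model scores as most probable."""
    return max(candidates, key=lambda text: log_prob(text.lower().split()))


candidates = ["recognize speech", "wreck a nice beach"]
best = pick_transcript(candidates)
print(best)
```

With these made-up counts the sketch prefers "recognize speech". Note that a plain sum of log-probabilities also favors shorter phrases, which is one reason real systems normalize and weight their scores more carefully.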

Why AI Transcription Accuracy Varies

You've probably noticed that the accuracy of an audio to text AI can feel like a moving target. One day it’s flawless, the next it’s full of mistakes. The reason is simple: not all audio is created equal. While the best AI models boast over 95% accuracy, that number assumes you're feeding them pristine, studio-quality sound. In the real world, the AI’s performance is a direct reflection of the audio quality it has to work with.
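Accuracy figures like these are commonly derived from word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the AI's transcript into the correct one, divided by the length of the correct one. The article doesn't say which metric a given vendor uses, so treat the following as a rough sketch of the usual approach, with a made-up sentence pair.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # previous[j] holds the edit distance between the reference words seen
    # so far (excluding the current one) and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


# Invented example: the AI heard "a" where the speaker said "the".
reference = "please schedule the meeting for nine tomorrow"
hypothesis = "please schedule a meeting for nine tomorrow"
wer = word_error_rate(reference, hypothesis)
print(f"WER: {wer:.1%}, accuracy: {1 - wer:.1%}")
```

One wrong word in a seven-word sentence already pulls accuracy well below 95%, which is why short, noisy clips can feel so much worse than a vendor's headline number.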

The core of the issue is a classic signal-to-noise problem. The words you want transcribed are the “signal.” Everything else—the humming air conditioner, the person coughing in the background, multiple people talking at once—is “noise.” The more noise you have, the harder it is for the AI to pick out the signal.

Even things you might not think about can throw the AI for a loop. A speaker standing too far from the mic, using a cheap headset, or talking in a room with a bad echo can all distort the audio just enough to confuse the system’s pattern-matching algorithms.
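If you want to put a number on the signal-to-noise idea, the usual measure is the signal-to-noise ratio in decibels. The sketch below uses pure sine tones as stand-ins for a voice and a background hum; the sample rate, frequencies, and amplitudes are assumptions picked to keep the arithmetic simple, not measurements from a real recording.

```python
import math

SAMPLE_RATE = 16_000  # samples per second, an assumed recording rate


def tone(frequency_hz, amplitude, seconds=1.0):
    """Generate a sine wave as a simple stand-in for real audio."""
    count = int(SAMPLE_RATE * seconds)
    return [
        amplitude * math.sin(2 * math.pi * frequency_hz * n / SAMPLE_RATE)
        for n in range(count)
    ]


def rms(samples):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def snr_db(signal, noise):
    """Signal-to-noise ratio in decibels, computed from RMS levels."""
    return 20 * math.log10(rms(signal) / rms(noise))


speech_like = tone(220, amplitude=1.0)  # stand-in for the speaker
quiet_hum = tone(50, amplitude=0.1)     # faint air-conditioner hum
loud_hum = tone(50, amplitude=0.5)      # the same hum, five times louder

print(f"Quiet room: {snr_db(speech_like, quiet_hum):.1f} dB")
print(f"Noisy room: {snr_db(speech_like, loud_hum):.1f} dB")
```

A higher figure means the voice stands out more clearly from the background. In practice you would estimate the noise level from a stretch of the recording where nobody is talking.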

Key Factors That Influence Accuracy

A handful of specific variables can make or break your transcription quality. Getting a handle on these is the first step to getting cleaner, more reliable results.

Audio Clarity: This is the big one. Clear audio from a good microphone placed close to the speaker will always outperform a recording made on a phone sitting in the middle of a noisy conference room.

Background Noise: Competing sounds are the AI’s kryptonite. A bustling coffee shop, passing sirens, or even quiet music can muddy the phonetic details the AI relies on, causing it to mishear or skip words entirely.

Speaker Overlap: When people talk over each other, their sound waves get mashed together into a confusing mess. Even the smartest AI systems struggle to untangle that jumble into separate, coherent sentences.

Accents and Pacing: Modern AI is trained on a huge variety of speech patterns, but heavy accents, regional slang, or speaking exceptionally fast can still be a challenge. If the speech deviates too much from the patterns the AI knows, mistakes are more likely.

The table below breaks down these common factors, explaining their direct impact and what you can do to manage them.

Factors Impacting Audio to Text AI Accuracy

| Factor | Impact on Accuracy | How to Optimize |
|---|---|---|
| Audio Quality | Low-quality recordings with distortion or low bitrates are difficult for an AI to interpret. | Use a high-quality microphone and record in a format like WAV or FLAC for best results. |
| Background Noise | Competing sounds from traffic, music, or other conversations can obscure the primary speaker's words. | Record in a quiet, controlled environment. Use noise-canceling microphones if possible. |
| Speaker Clarity | Mumbling, fast speech, or inconsistent volume levels can lead to transcription errors. | Encourage speakers to enunciate clearly and speak at a moderate, consistent pace. |
| Accents & Dialects | Strong, unfamiliar accents may not be well-represented in the AI's training data, causing misinterpretations. | Choose an AI model that specifies training on diverse accents or allows for accent adaptation. |
| Specialized Jargon | Technical, medical, or legal terms that aren't in the AI’s general vocabulary will likely be transcribed incorrectly. | Use a transcription service with custom vocabulary features or one specifically trained for your industry. |
| Speaker Overlap | When multiple people speak at once, the AI struggles to separate the different voices and words. | Encourage speakers to take turns. Use multi-channel recording to isolate each speaker's audio track. |

As you can see, a little bit of prep work on the front end can make a huge difference in the final transcript.

Dealing With Specialized Terminology

Another major hurdle for a general-purpose AI is industry-specific jargon. An AI trained on everyday conversations isn't going to recognize complex legal terms, scientific names, or company-specific acronyms. Instead, it will just guess the closest phonetic match it knows.

The AI transcribes what it thinks it hears based on probability. If it has never encountered the term "brachiocephalic artery," it might transcribe it as "break you cephalic artery" because those are more common words in its training data.

This is a massive problem in fields like healthcare, where one wrong word can have serious consequences. For these situations, you need an AI that's been specifically trained on that vocabulary. Our guide on speech to text medical transcription dives deeper into how these specialized systems are built for precision.
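To show how a list of known terms can rescue a misheard phrase, here is a rough post-processing sketch using Python's standard `difflib` module. It is only a stand-in: specialized services typically bias the recognizer itself rather than patching its output afterward, and the function name, threshold, and window size below are assumptions chosen for this example.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    """Character-level similarity between two strings, from 0.0 to 1.0."""
    return SequenceMatcher(None, a, b).ratio()


def apply_custom_vocabulary(transcript, vocabulary, threshold=0.75, max_window=3):
    """Replace runs of words that closely resemble a known term.

    The threshold and max_window defaults are illustrative guesses,
    not tuned values.
    """
    words = transcript.split()
    output = []
    i = 0
    while i < len(words):
        best = None  # (score, number_of_words_consumed, replacement_term)
        for size in range(1, max_window + 1):
            window = words[i:i + size]
            if len(window) < size:
                break  # ran past the end of the transcript
            joined = "".join(window).lower()
            for term in vocabulary:
                score = similarity(joined, term.lower().replace(" ", ""))
                if score >= threshold and (best is None or score > best[0]):
                    best = (score, size, term)
        if best:
            output.append(best[2])
            i += best[1]
        else:
            output.append(words[i])
            i += 1
    return " ".join(output)


raw = "the break you cephalic artery is clear"
fixed = apply_custom_vocabulary(raw, ["brachiocephalic"])
print(fixed)
```

A fuzzy match like this can also "correct" words that were right to begin with, so in a clinical setting any replacement would still need a human check.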

By understanding these variables, you can start taking practical steps—like using better mics, finding a quiet room, and asking people to speak one at a time—to dramatically improve the accuracy of any transcription tool you use.

Putting Audio to Text AI into Practice

It's one thing to talk about the theory, but the real magic of audio to text AI happens when you see it solving actual problems in the real world. This isn't just a neat trick anymore. Across different fields, it's becoming a fundamental tool that changes how we work with spoken information, driving real productivity and opening up new possibilities.

The proof is in the numbers. The market for AI transcription is already a big deal, valued at around USD 4.5 billion in 2024. And it's not slowing down. Forecasts predict it will balloon to about USD 19.2 billion by 2034. Why? Because industries like healthcare, law, and media are relying on it more and more to get things done faster and more accurately.

Transforming Media and Content Creation

Think about a media company sitting on a mountain of video and audio archives. For years, that content was "dark data"—valuable, but almost impossible to search. If a journalist needed to find a specific quote from hundreds of hours of interviews, they were stuck listening manually, sometimes for days on end.

Now, an audio to text AI can churn through that entire library in a tiny fraction of the time, creating a completely searchable text database. Suddenly, content creators can:

Pinpoint key moments instantly: Find a specific quote or topic in seconds, not hours.

Create subtitles and captions: This makes video content accessible to everyone and gives it a huge SEO boost by making spoken words readable to search engines.

Repurpose content with ease: Pull text highlights from a podcast to quickly create blog posts, social media updates, or promotional snippets.
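Subtitles are a good example of how little glue is needed once you have a transcript with timestamps. The sketch below turns a few segments into the widely used SubRip (.srt) caption format. The segment data is invented, and it assumes your transcription tool can hand back start and end times in seconds, which many do but not all.

```python
def format_timestamp(seconds):
    """Format seconds as the HH:MM:SS,mmm form used in SRT files."""
    millis = round(seconds * 1000)
    hours, millis = divmod(millis, 3_600_000)
    minutes, millis = divmod(millis, 60_000)
    secs, millis = divmod(millis, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"


def to_srt(segments):
    """Build SRT text from (start_seconds, end_seconds, text) tuples."""
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        timing = f"{format_timestamp(start)} --> {format_timestamp(end)}"
        blocks.append(f"{index}\n{timing}\n{text}\n")
    return "\n".join(blocks)


# Hypothetical transcript segments from a podcast intro.
segments = [
    (0.0, 2.5, "Welcome back to the show."),
    (2.5, 6.0, "Today we're talking about voice technology."),
]
srt_text = to_srt(segments)
print(srt_text)
```

Saving `srt_text` to a file with an `.srt` extension gives you captions that most video players and platforms can load.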

Revolutionizing Healthcare and Legal Fields

In high-stakes professions, every minute and every detail counts. Doctors and lawyers have always been swamped with documentation, often spending their evenings just typing up notes from the day.

AI dictation completely flips that script. A doctor can now speak patient notes directly into an electronic health record (EHR) between appointments. The AI does the typing, freeing them up to focus on patients and cutting down on burnout.

It’s a similar story for legal teams. They can automatically transcribe hours of depositions or courtroom recordings.

Finding a single, critical phrase buried in a 10-hour testimony used to take a paralegal days of tedious work. Now, it's as simple as a keyword search. This drastically speeds up case preparation and can reveal crucial details that might have otherwise been overlooked.
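Once the testimony exists as timestamped text, that keyword search really is only a few lines of code. Here is a minimal sketch; the testimony snippets, timestamps, and function names are all made up for illustration.

```python
def format_clock(seconds):
    """Format seconds as H:MM:SS for pointing back into the recording."""
    minutes, secs = divmod(int(seconds), 60)
    hours, minutes = divmod(minutes, 60)
    return f"{hours}:{minutes:02d}:{secs:02d}"


def find_phrase(segments, phrase):
    """Return (timestamp, text) for every segment containing the phrase."""
    needle = phrase.lower()
    return [
        (format_clock(start), text)
        for start, text in segments
        if needle in text.lower()
    ]


# Hypothetical (start_seconds, text) segments from a long deposition.
testimony = [
    (95.0, "I arrived at the warehouse around nine."),
    (18250.0, "The signed contract was in the blue folder."),
    (30110.0, "Nobody mentioned the contract after that."),
]

hits = find_phrase(testimony, "signed contract")
for timestamp, text in hits:
    print(timestamp, text)
```

Because each hit carries its timestamp, a reviewer can jump straight to that moment in the audio to confirm the wording before relying on it.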

For anyone who relies on in-depth interviews, our guide on using transcription for research provides some great methods for turning all that audio into data you can actually analyze.

Enhancing Accessibility and Customer Experience

This technology isn't just for internal workflows. Companies are using it to make their services more inclusive and responsive. For examples of AI voice technology in action, see the article "What Is an AI Receptionist?", which breaks down how these systems work in everyday communication.

Think about it: AI can provide real-time transcripts for customer support calls, giving agents a live script to reference so no detail gets missed. The same technology offers live captioning for webinars and online events, opening them up to people who are deaf or hard of hearing. From media vaults to medical charts, audio to text AI is finally unlocking the value that was trapped in spoken words.

How to Choose the Right AI Transcription Tool

Picking the right audio to text AI tool can feel like a chore, but it doesn't have to be. The secret is to stop looking for the "best" one-size-fits-all solution and start focusing on what you actually need. When you match the tool to your specific workflow, you'll find something that not only gets the job done but actually makes your life easier.

First things first, what are you trying to accomplish? A journalist transcribing a sensitive interview has very different needs than a student recording a lecture. A researcher analyzing focus group data needs features that a developer documenting a team meeting might not. Pinpointing your main goal is the single most important step.

Assess Core Features and Accuracy

With your goal in mind, you can start digging into the details. Let's be honest—not all transcription tools are created equal. Some are built for lightning-fast turnarounds, while others are fine-tuned to pull clear dialogue out of a noisy background. Your job is to find the features that solve your biggest headaches.

Here are a few things I always tell people to look for:

Speaker Identification: Can the tool tell who is speaking and when? If you're dealing with interviews, meetings, or panel discussions, this is an absolute must-have.

Custom Vocabulary: This one is a game-changer. If you work in a specialized field like medicine, law, or engineering, the ability to teach the AI specific names, acronyms, and jargon will drastically boost its accuracy.