Voice Recognition Software for Healthcare | Improve Clinical Efficiency

September 30, 2025

At its core, voice recognition software for healthcare is a technology that lets clinicians turn their spoken words into text that flows directly into an electronic health record (EHR). It’s essentially a digital scribe that captures everything from clinical notes and patient histories to diagnoses in real time, freeing providers from the endless cycle of manual typing.

Ending the Burden of Clinical Paperwork

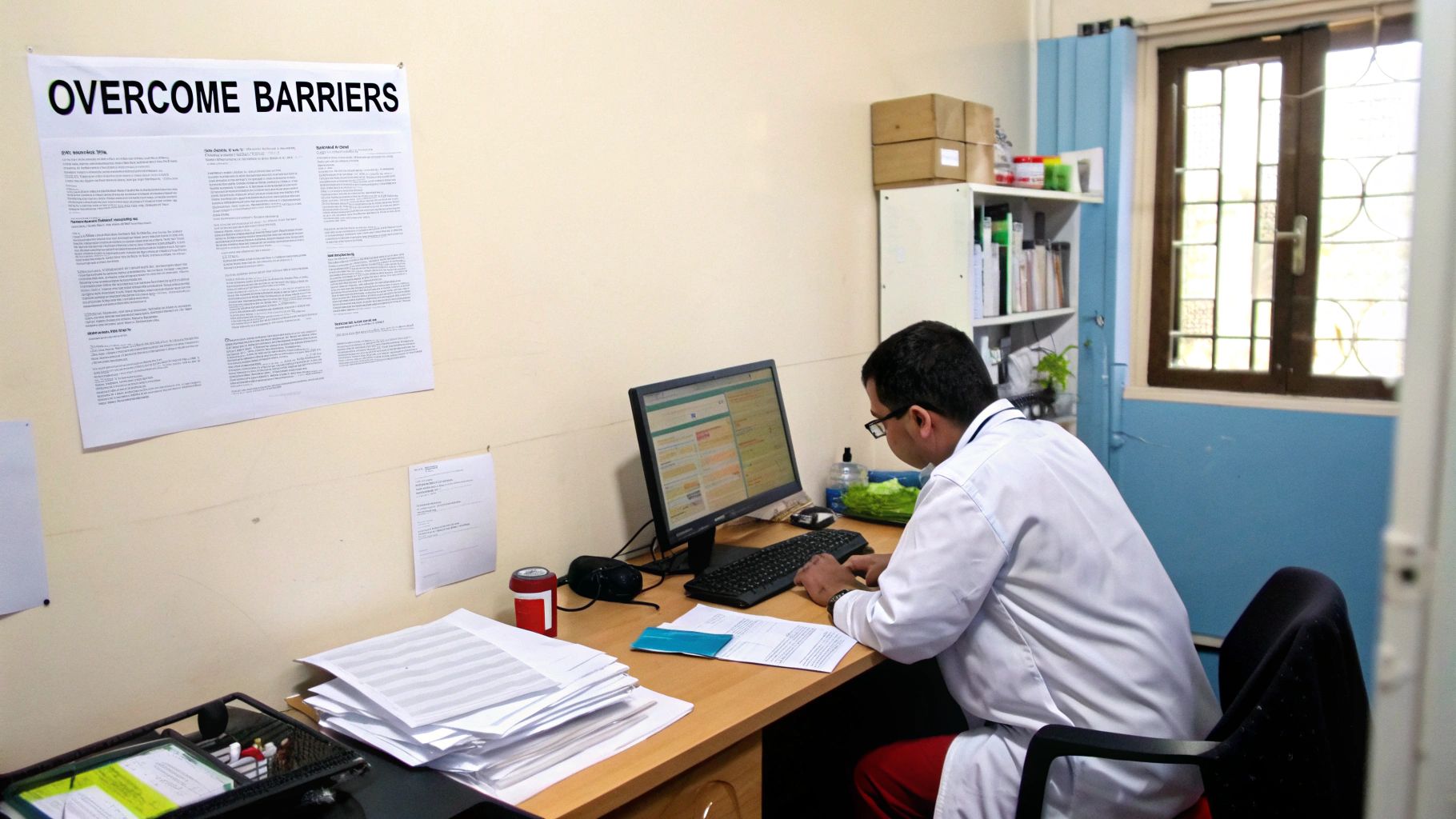

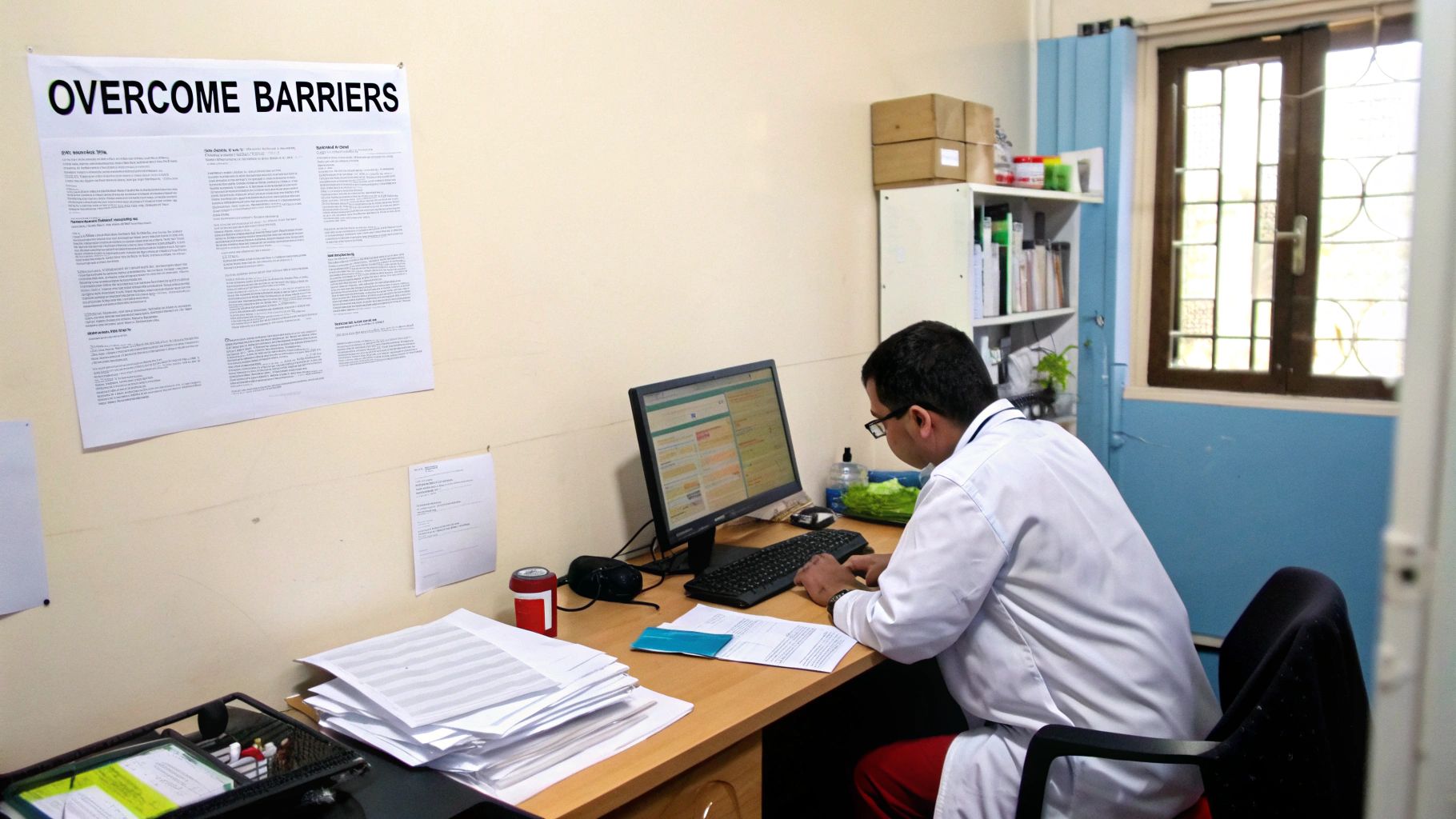

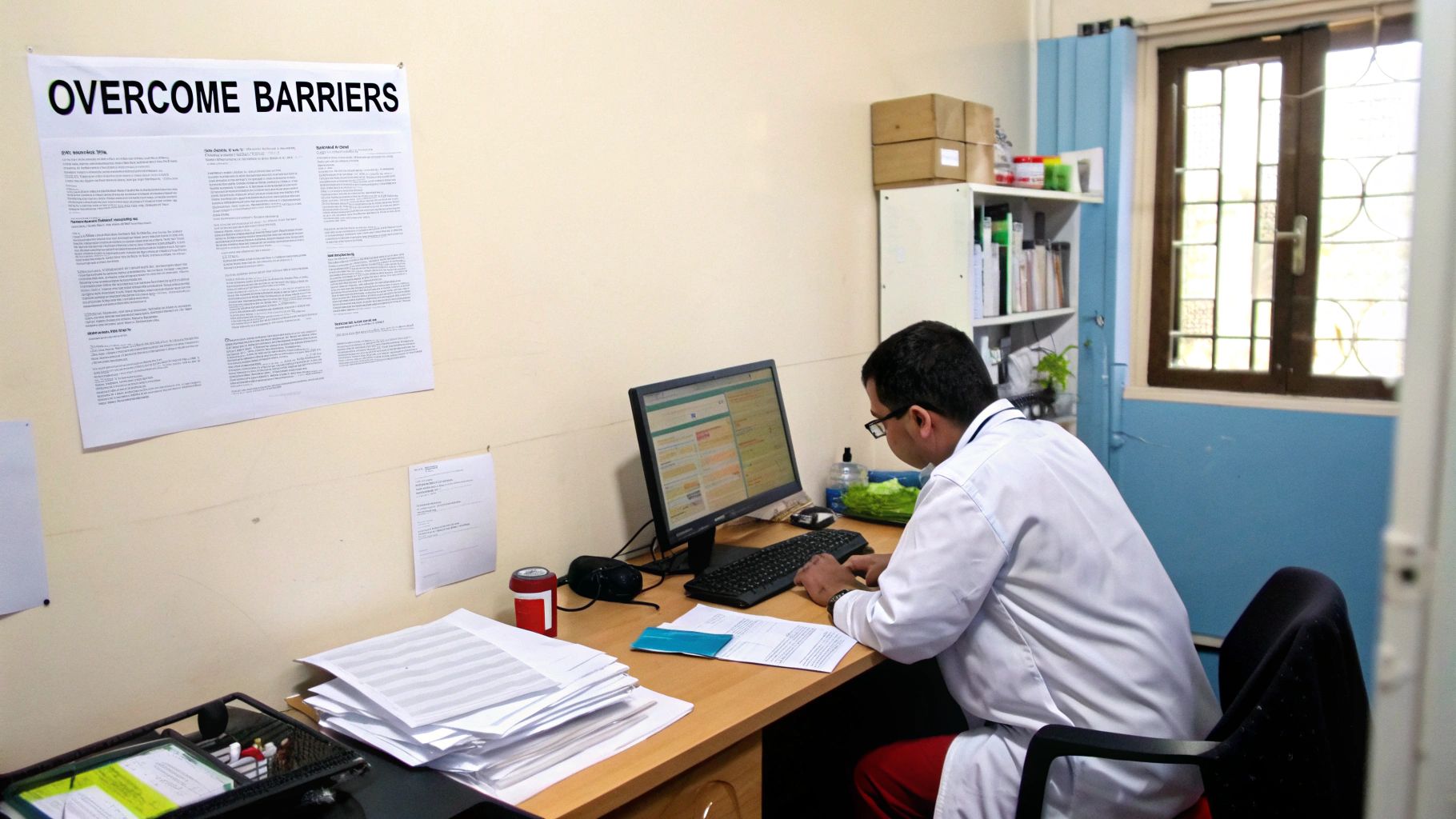

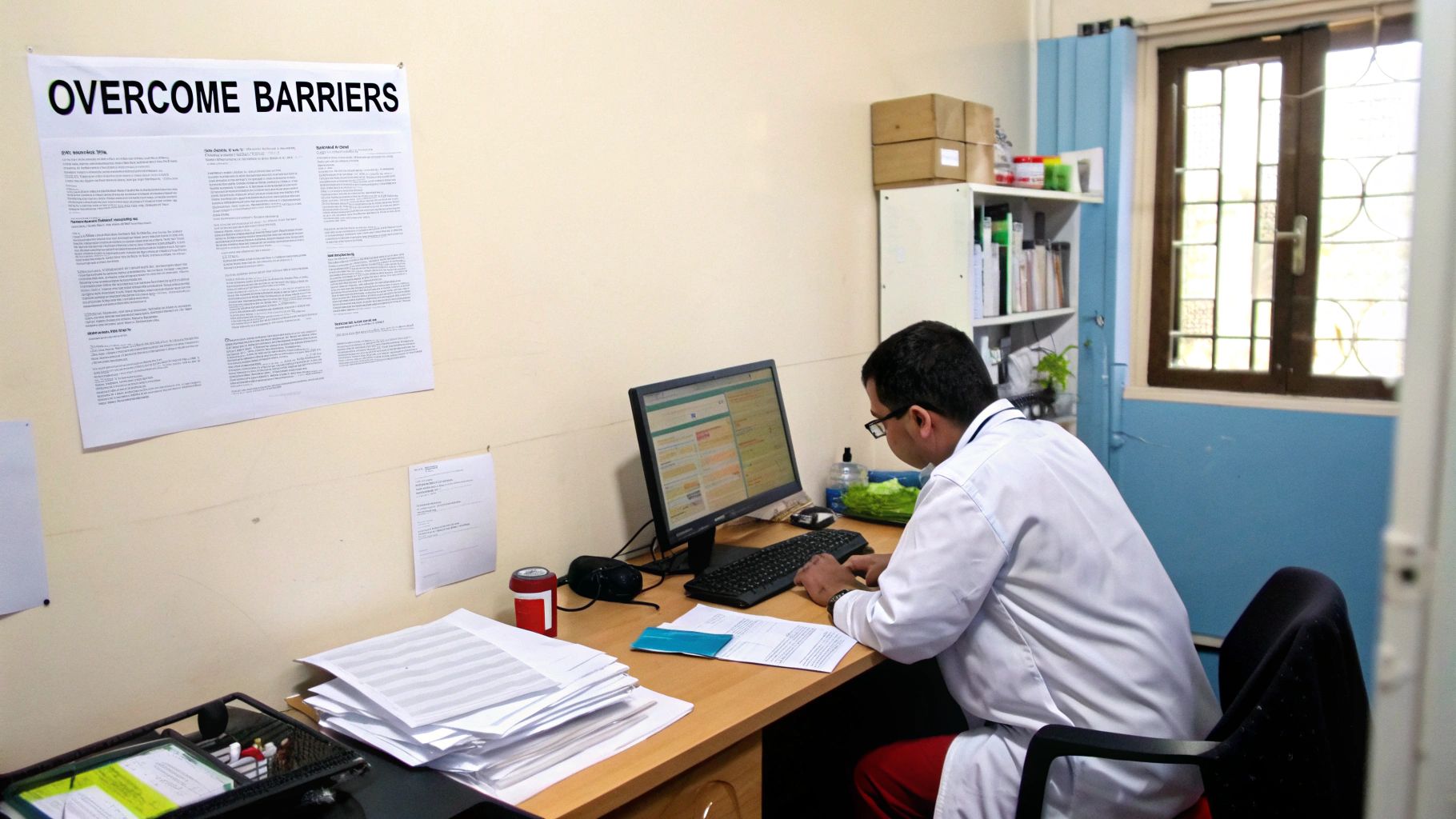

Picture this: a doctor wraps up a full day of seeing patients, only to be greeted by a mountain of clinical notes waiting to be typed. This isn't just a hypothetical scenario—it's the daily reality for countless healthcare professionals and a huge driver of burnout. All those hours spent typing or scribbling notes steal time that could be spent with patients or simply recharging.

This administrative slog is a massive, systemic problem. In fact, physicians often spend about 26.6% of their day on documentation and tack on an extra 1.77 hours after their regular workday just to catch up. While traditional methods like typing or handwriting are still around, voice recognition technology has become a game-changer for speeding up documentation and boosting accuracy. You can learn more about how voice recognition tackles this challenge in healthcare.

A Modern Solution to an Old Problem

This is exactly where voice recognition software steps in to offer a real, practical solution. Instead of being chained to a keyboard, a clinician can just speak their notes as they naturally would, either during or right after a patient visit. The software does the heavy lifting, instantly translating their speech into accurate, structured text that plugs right into the EHR.

It’s like turning the slow, tedious monologue of typing into a dynamic conversation with the patient's chart. This simple change allows clinicians to:

Reclaim Their Time: They can dramatically cut down on those late nights spent finishing up charts.

Improve Patient Focus: It’s easier to maintain eye contact and build rapport when you’re not staring at a screen.

Enhance Note Quality: Providers can capture richer, more detailed patient stories in their own words, without having to summarize on the fly for typing.

By taking over one of the most draining parts of a provider's job, this technology does more than just make things efficient. It helps bring the focus of medicine back to where it belongs: on the patient. It creates a path toward a more sustainable and satisfying way to practice, where technology finally works for the provider, not the other way around.

How Voice Recognition Actually Understands Doctors

It’s easy to think of medical voice recognition as just a fancier version of the speech-to-text on your smartphone. But that's not the whole story. While the basic concept is the same, voice recognition software for healthcare is playing an entirely different game. Your phone’s dictation tool might recognize the word "metformin," but it has no idea it's a diabetes medication or where it belongs in a patient’s chart.

Let's use an analogy. A standard voice app is like someone who can spell words aloud in a language they don't speak—they can repeat the sounds, but there's no comprehension. Healthcare voice recognition, on the other hand, is like a seasoned medical interpreter. It understands the clinical context, the subtle nuances, and what the doctor truly intends to document.

This sophisticated understanding comes from two powerful technologies working in tandem: Natural Language Processing (NLP) and Machine Learning (ML). These are the twin engines that turn a doctor's spoken words into structured, clinically meaningful data. To dig deeper into how these systems work, it's worth exploring the role of Artificial Intelligence in healthcare.

The Power of Natural Language Processing

This is where the real "understanding" happens. Natural Language Processing gives the software the ability to grasp the context of what's being said. It doesn't just process a linear string of words; it analyzes grammar, syntax, and the relationships between medical terms to figure out the doctor's meaning. It's the critical step that separates simple transcription from intelligent interpretation.

For instance, a system with robust NLP can:

Identify Clinical Entities: It knows that "myocardial infarction" is a diagnosis, "lisinopril" is a medication, and "shortness of breath" is a symptom.

Understand Medical Nuance: It can tell the difference between "patient has a family history of hypertension" and "patient is being treated for hypertension." Those are two very different clinical statements.

Structure the Narrative: It intelligently populates the correct fields in an EHR note, putting the right information into the History of Present Illness (HPI) or Assessment and Plan sections automatically.

This ability to parse the often-complex and fragmented way clinicians naturally speak is what makes the technology so incredibly useful in the real world.

Key Takeaway: NLP allows the software to comprehend the meaning behind a doctor's dictation, not just transcribe the words. It essentially reads between the lines to create an accurate and perfectly organized clinical note.
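To make the three NLP capabilities above a little more concrete, here is a deliberately tiny sketch in Python. It is not how any commercial product works: the `LEXICON` dictionary, the `tag_entities` function, and the "family history" rule are all hypothetical stand-ins for the large clinical vocabularies and trained models a real system would rely on.

```python
import re

# Hypothetical mini-lexicon for illustration only; a real system would draw on
# a full clinical vocabulary and statistical models, not a hand-written dict.
LEXICON = {
    "myocardial infarction": "diagnosis",
    "hypertension": "diagnosis",
    "lisinopril": "medication",
    "metformin": "medication",
    "shortness of breath": "symptom",
}

# Assumed cue phrase for telling family history apart from a current problem.
FAMILY_HISTORY = re.compile(r"\bfamily history of\b", re.IGNORECASE)


def tag_entities(sentence: str) -> list[dict]:
    """Return each lexicon term found in the sentence with its type and context."""
    lowered = sentence.lower()
    found = []
    for term, kind in LEXICON.items():
        match = re.search(r"\b" + re.escape(term) + r"\b", lowered)
        if match:
            # If the cue phrase appears before the term, treat it as family history.
            preceding = lowered[: match.start()]
            context = "family_history" if FAMILY_HISTORY.search(preceding) else "patient"
            found.append({"term": term, "type": kind, "context": context})
    return found


print(tag_entities("Patient has a family history of hypertension"))
print(tag_entities("Patient is being treated for hypertension with lisinopril"))
```

Even this toy version shows why context matters: the same word, "hypertension," ends up with a different label depending on the words around it.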

Adapting with Machine Learning

If NLP provides the understanding, Machine Learning provides the intelligence to adapt and get better. ML algorithms allow the software to learn from every single interaction, constantly refining its performance. This is absolutely essential in a field as diverse as medicine.

This ongoing learning process allows the software to adjust to:

Individual Accents and Dialects: It quickly becomes familiar with a specific user’s unique speech patterns, pronunciation, and pacing.

Dictation Styles: The software learns whether a doctor prefers to speak in short, punchy commands or long, flowing sentences.

New Terminology: As new drugs, procedures, and acronyms enter the medical lexicon, the system updates its vocabulary to keep up.

This adaptive intelligence is the secret behind today's top systems achieving accuracy rates of over 99%. The software is a dynamic, evolving tool that gets smarter and more personalized with every use, making it an indispensable partner for any busy clinician.
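It helps to know what an accuracy figure like this usually means. Speech recognition accuracy is commonly reported as one minus the word error rate (WER), which counts the substitutions, insertions, and deletions needed to turn the transcript into the reference text. The article does not say how the figures quoted here were measured, so treat the sketch below as one common way to compute it, with `word_error_rate` being an illustrative name rather than any vendor's API.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance between the texts, divided by reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # Classic Levenshtein distance, computed row by row over words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            ))
        prev = curr
    return prev[-1] / len(ref)


reference = "patient denies chest pain and shortness of breath"
transcript = "patient denies chest pain and shortness of breadth"
wer = word_error_rate(reference, transcript)
print(f"WER: {wer:.3f}, accuracy: {1 - wer:.1%}")
```

One caveat worth keeping in mind: WER treats every word equally, so a single clinically important slip counts the same as a harmless one.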

5 Essential Features of Modern Voice Recognition Tools

When you start looking at voice recognition software for healthcare, you'll quickly realize that not all products are the same. Sure, they all turn speech into text, but the great ones do so much more. They're built from the ground up to handle the unique chaos and complexity of a clinical setting.

Think of it like this: a basic speech-to-text app is a simple calculator. A modern clinical voice recognition system is a full-blown financial modeling spreadsheet. One just crunches numbers; the other helps you make critical decisions, organize data, and see the bigger picture. If you want to get into the nuts and bolts of the AI driving this shift, this guide on voice to text AI is a great place to start.

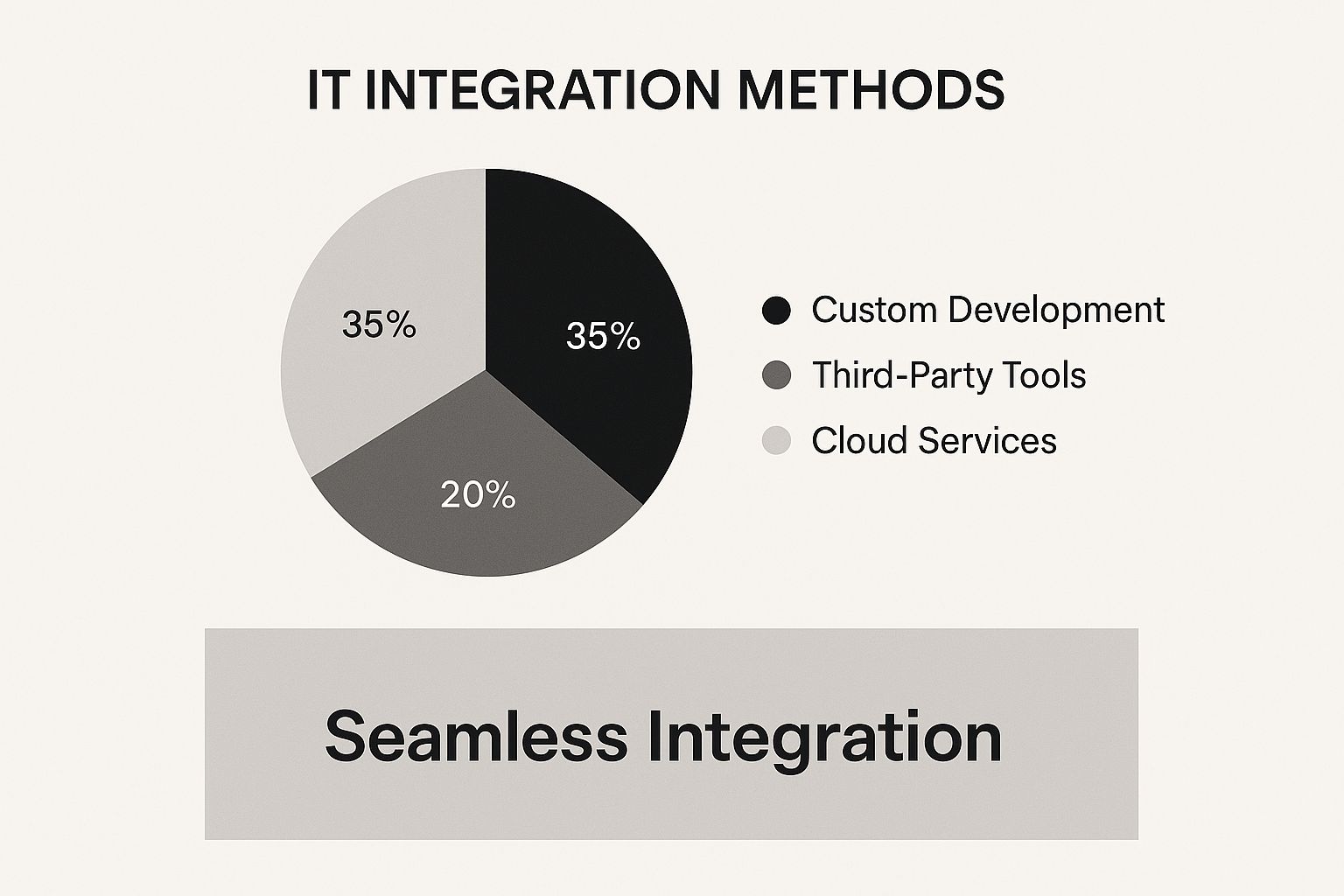

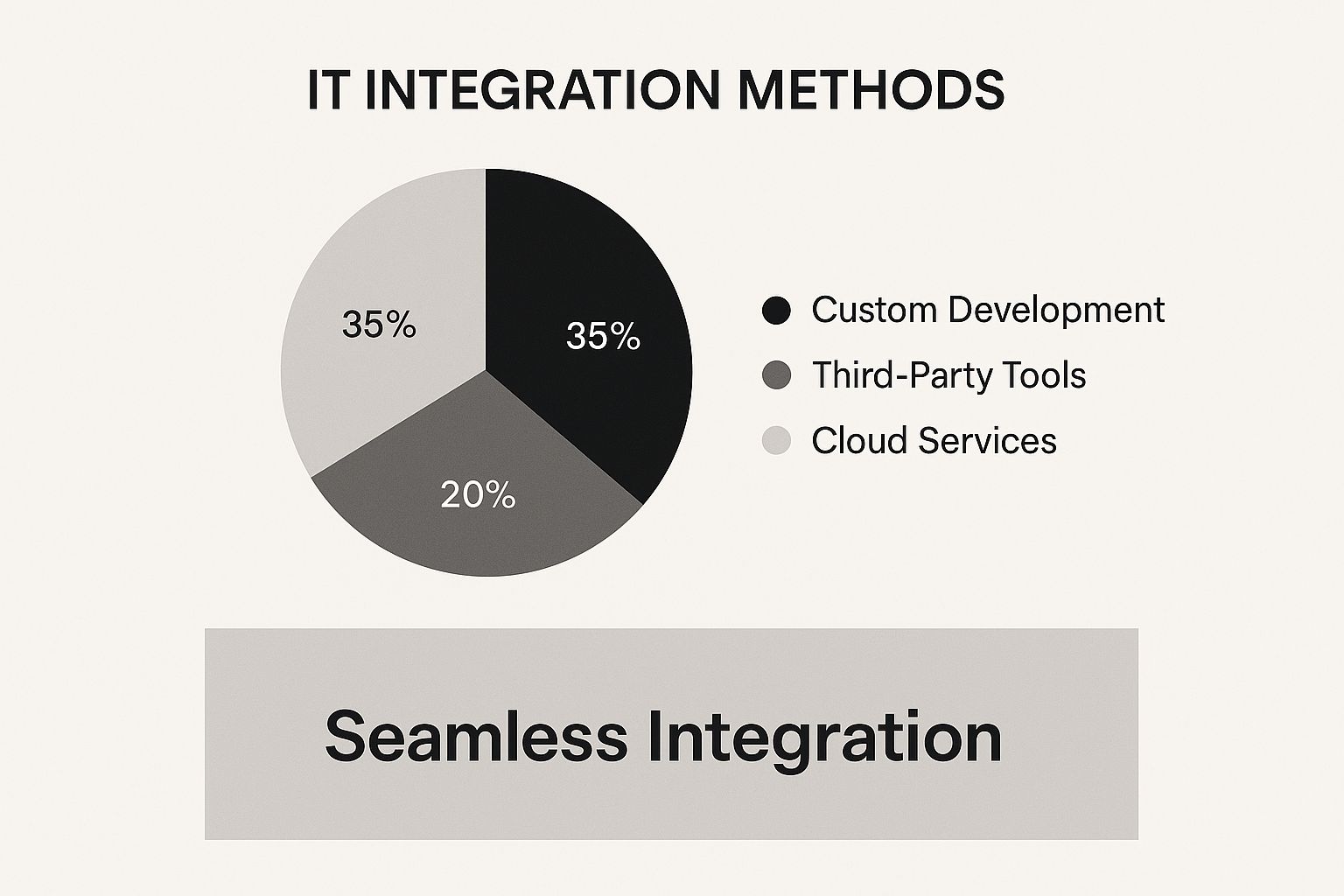

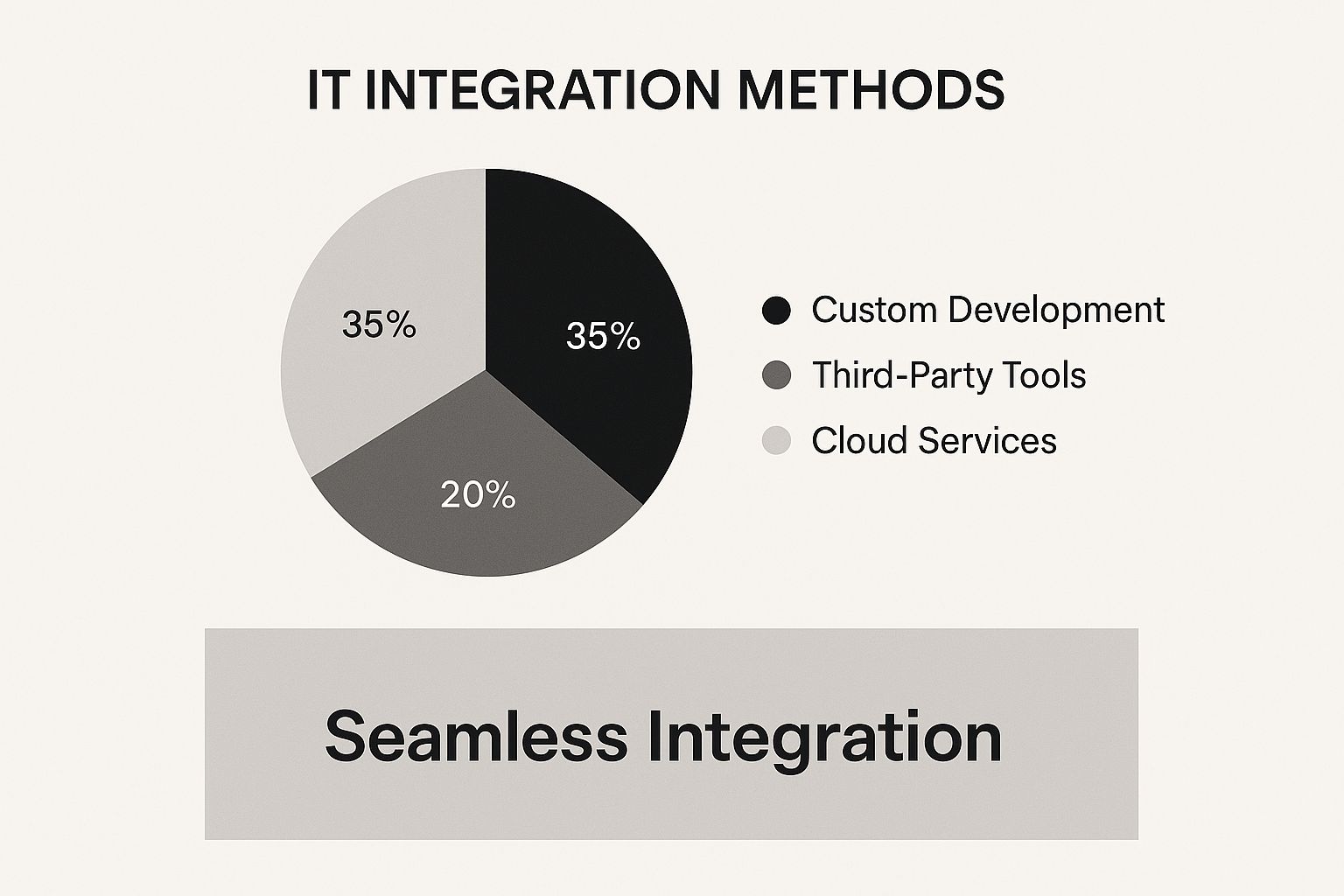

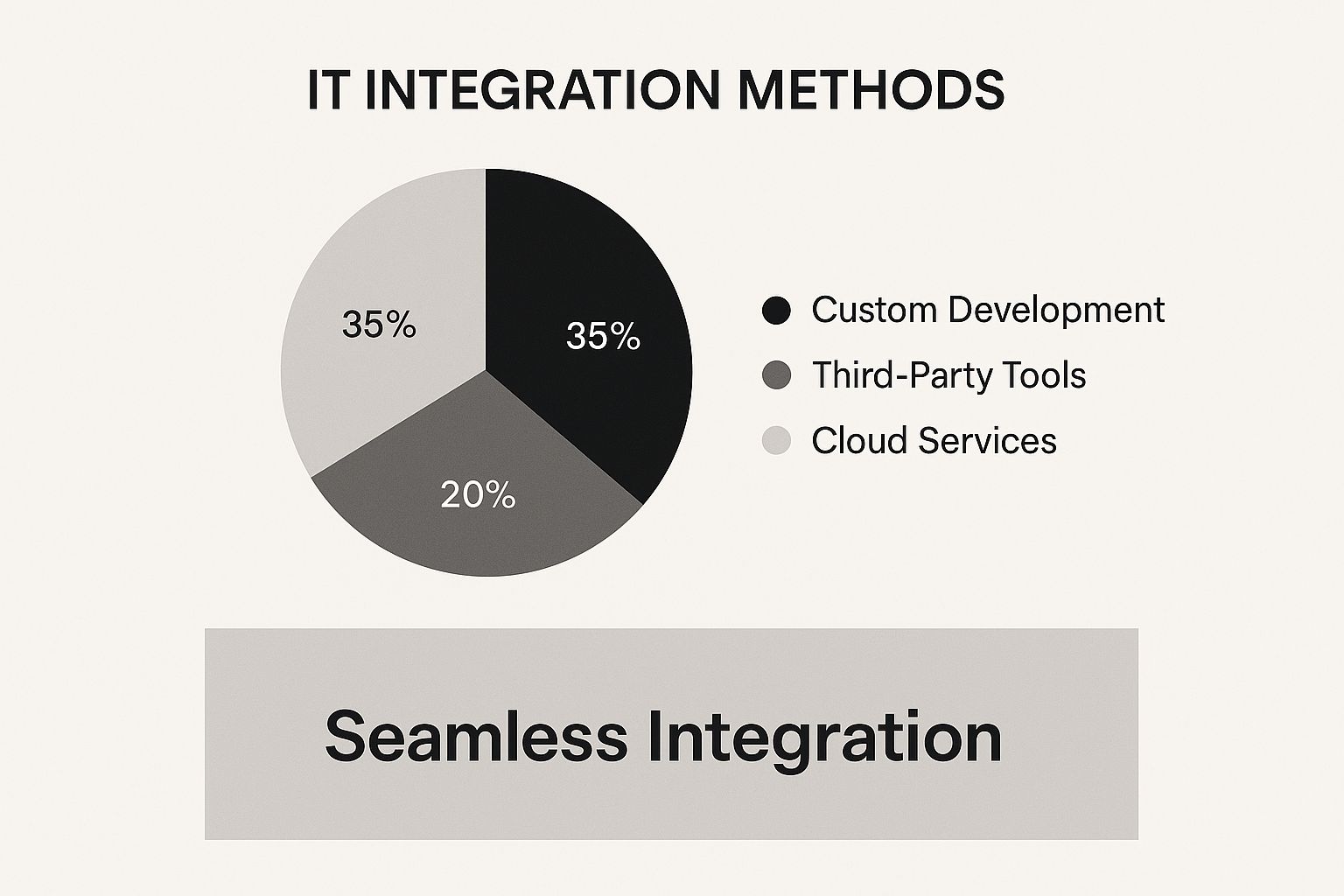

Integration is the name of the game. A tool that can't talk to your other systems is just another silo, and healthcare has enough of those already.

As you can see, seamless workflow with existing tech like your EHR isn't just a "nice-to-have"—it's the whole point.

Front-End vs. Back-End Recognition

One of the first things you'll need to understand is the difference between front-end and back-end voice recognition. It really comes down to a simple question: when does the transcription happen, and who signs off on it?

A lot of practices end up using a mix of both, depending on the department and the doctor's preference. The key is to match the workflow to the clinical need.

The table below breaks down the core differences to help you see which might be a better fit for different scenarios.

Essential Features Comparison: Front-End vs. Back-End Voice Recognition

| Feature | Front-End Recognition | Back-End Recognition |
|---|---|---|
| How It Works | Clinician speaks, and words appear on the screen instantly, right in the EHR. | Clinician records audio, which is sent to a server. The text comes back later. |
| Best For | Busy clinics, ERs, and situations needing immediate note completion. | Specialties with high-volume, complex reports like radiology or pathology. |
| Turnaround Time | Immediate. The note is done and signed in one session. | Delayed. Can range from a few hours to a full day. |
| Who Edits? | The clinician self-corrects on the spot. | A medical transcriptionist or editor often reviews and cleans up the draft first. |
| Primary Benefit | Speed and control. The provider owns the entire process from start to finish. | Efficiency. The provider dictates and moves on, outsourcing the editing task. |

Ultimately, front-end gives the physician total control for faster sign-offs, while back-end lets them offload the tedious editing work so they can get to the next patient or scan.

Game-Changing Capabilities

Beyond the core transcription engine, the truly powerful systems come with features that feel less like a tool and more like a partner.

Voice commands are a perfect example. Instead of clicking through a dozen menus, a doctor can just say, "Insert normal physical exam template" or "Show me Jane Doe's last labs." It’s about removing friction and keeping the focus on the patient, not the computer screen.
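Under the hood, a voice command is just recognized text that gets matched against known patterns before it is treated as ordinary dictation. The sketch below shows that idea with the two example phrases from this section. The `PATTERNS` list, the action names, and `parse_utterance` are all made up for illustration; real products define their own command grammars.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Command:
    action: str
    argument: str


# Hypothetical command grammar; action names are illustrative, not a real API.
PATTERNS = [
    (re.compile(r"^insert (?P<arg>.+) template$", re.IGNORECASE), "insert_template"),
    (re.compile(r"^show me (?P<arg>.+?)'s last labs$", re.IGNORECASE), "show_labs"),
]


def parse_utterance(text: str) -> Optional[Command]:
    """Return a Command if the text matches a known pattern, else None (plain dictation)."""
    cleaned = text.strip().rstrip(".")
    for pattern, action in PATTERNS:
        match = pattern.match(cleaned)
        if match:
            return Command(action=action, argument=match.group("arg"))
    return None


print(parse_utterance("Insert normal physical exam template"))
print(parse_utterance("Show me Jane Doe's last labs"))
print(parse_utterance("Patient reports mild headache since Tuesday"))
```

Anything that does not match a pattern falls through as `None`, which a caller would simply append to the note as dictated text.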

And let's not forget mobile accessibility. Modern medicine doesn't happen at a desk. Clinicians need to capture notes during rounds, between patient rooms, or even from home. A solid mobile app means documentation gets done on the spot, which means fewer forgotten details and more accurate records.

Real-World Impact on Patient Care and Efficiency

It’s one thing to talk about benefits in theory, but the true value of voice recognition software for healthcare really comes alive in the day-to-day work of a clinic or hospital. This is where those efficiency gains stop being just numbers on a page and start translating into better patient outcomes and a more sustainable workload for clinicians. The technology goes from a neat idea to a tool that genuinely changes how work gets done.

Think about a radiologist interpreting a complex MRI. Instead of constantly stopping to type, they can dictate their findings in real time as they analyze each image, capturing subtle observations while they're still fresh. This not only speeds up the reporting process but also makes sure every critical detail is logged accurately. The end result is a faster and more precise diagnosis.

Or picture a surgeon in the operating room. Post-op notes are essential, but documenting them immediately without breaking sterile protocol is a huge challenge. With voice recognition, the surgeon can dictate their notes right after the procedure is finished, ensuring accuracy and compliance without ever needing to touch a keyboard.

Transforming the Patient Encounter

The primary care setting is where you can see some of the most profound changes. A doctor can carry on a natural conversation during an exam, speaking their notes as they go. This simple act allows them to maintain eye contact and build a real connection with the patient instead of having their back turned to a computer screen.

This shift creates a powerful ripple effect throughout the entire practice:

Less Time on Paperwork: Physicians often complete their notes 30% to 50% faster than they would by typing, which is a massive relief in a back-to-back schedule.

Fewer Clerical Errors: When you dictate notes in the moment, you're far less likely to make transcription mistakes or forget important details later on.

Faster Billing Cycles: As soon as notes are finished and signed, the coding and billing process can kick off immediately, eliminating costly delays.

A key clinical study found that modern, cloud-based voice recognition can hit accuracy rates over 90% even with complex medical terminology. This frees up a significant amount of time for clinicians to focus on their patients.
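To get a feel for what those percentages could mean in hours, here is some back-of-the-envelope arithmetic using the figures quoted earlier in this article (26.6% of the day on documentation, plus 1.77 hours after work). Two things are assumptions on our part: an 8-hour workday, and reading "30% to 50% faster" as 30% to 50% less documentation time. Your own numbers will differ.

```python
WORKDAY_HOURS = 8.0   # assumption: length of a regular workday
DOC_SHARE = 0.266     # share of the workday spent on documentation (from the article)
AFTER_HOURS = 1.77    # extra documentation hours after the workday (from the article)


def daily_hours_saved(reduction: float, workday_hours: float = WORKDAY_HOURS) -> float:
    """Hours saved per day if total documentation time shrinks by `reduction`."""
    baseline = DOC_SHARE * workday_hours + AFTER_HOURS
    return baseline * reduction


for reduction in (0.30, 0.50):
    saved = daily_hours_saved(reduction)
    print(f"{reduction:.0%} less documentation time: about {saved:.1f} hours saved per day")
```

Under those assumptions, the baseline is just under four hours of documentation a day, so the savings land somewhere between one and two hours.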

A Clear Return on Investment

Beyond the obvious clinical perks, the boost to morale is impossible to ignore. Taking away a major source of administrative frustration helps physicians feel less overwhelmed and more engaged with the meaningful parts of their job. It's a direct countermeasure to one of the biggest drivers of burnout. Gaining a wider perspective on how medical practices are improving with technology can put these kinds of advancements into context.

At the end of the day, the software pays for itself by turning saved time into better care. Every minute a doctor isn't typing is another minute they can spend listening to a patient, thinking through a diagnosis, or collaborating with colleagues. If you want to dig deeper into the mechanics of this, our guide to speech-to-text medical transcription (https://voicetype.com/blog/speech-to-text-medical-transcription) is a great place to start.

Choosing the Right Voice Recognition Partner

Picking voice recognition software for your healthcare practice isn't just about buying a tool. Think of it as bringing on a long-term partner. When you get it right, administrative tasks melt away and clinical workflows just flow. But the wrong choice? That leads to frustrated users, wasted money, and an implementation that never gets off the ground.

The secret is to look past the flashy features and zero in on what actually matters in a busy clinical setting. You need a solution that fits into your existing systems like a puzzle piece, understands complex medical jargon without a hitch, and comes from a vendor who’s truly invested in your team’s success with solid training and support.

This is a big decision, and it’s one that more and more practices are making. The global medical speech recognition market was valued at around USD 1.73 billion in 2024 and is expected to surge to USD 5.58 billion by 2035. This isn't just a trend; it's a fundamental shift in how clinical work gets done. You can dig deeper into the numbers with this global forecast for medical speech recognition.
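For context, those two market figures imply a compound annual growth rate of roughly 11% a year. The quick calculation below uses only the numbers quoted above; the `cagr` helper is our own, and the result is an implied average, not a forecast of any single year.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# USD 1.73 billion in 2024 growing to USD 5.58 billion by 2035.
rate = cagr(1.73, 5.58, 2035 - 2024)
print(f"Implied annual growth: {rate:.1%}")
```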

Key Evaluation Criteria

To make a smart choice, you need to ask the right questions. Your evaluation should be built around a few make-or-break areas. When you're talking to vendors and watching demos, treat it like an interview and push for clear, honest answers on these points.

EHR Integration: How well does the software actually play with your EHR? Don't just take their word for it. Ask for a live demo showing how it fills out fields, moves between screens, and signs off on notes directly within your system.

Medical Vocabulary and Accuracy: Can it keep up with the specific terminology, drug names, and acronyms your specialty uses every day? Any reputable vendor should be able to show you hard data on its accuracy rates with relevant medical dictionaries.

Training and Support: What happens after you sign the contract? Look for vendors who offer a clear onboarding plan, real human support, and a go-to person you can call when things get tricky.

Setting Up a Pilot Program

Brochures and canned demos can only show you so much. The only real way to know if a solution will work for your team is to test it in the wild with a pilot program.

Getting the software into the hands of a small group of clinicians gives you a real-world stress test. They can see how it performs under pressure, providing priceless feedback before you even think about a full rollout.

A successful pilot program should have clear goals: measure time saved on documentation, track user satisfaction, and confirm the software’s accuracy with your providers' dictation styles.
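Those three goals are easy to track in a simple spreadsheet, or in a few lines of Python if you prefer. The sketch below is one possible way to summarize pilot results. The `PilotRecord` fields and the sample numbers are invented for illustration and are not real pilot data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class PilotRecord:
    clinician: str
    minutes_per_note_before: float
    minutes_per_note_after: float
    satisfaction_1_to_5: int
    word_accuracy: float  # e.g. 0.97 for 97% of words transcribed correctly


def summarize(records: list[PilotRecord]) -> dict:
    """Roll up the three pilot goals: time saved, satisfaction, and accuracy."""
    if not records:
        raise ValueError("need at least one pilot record")
    return {
        "avg_minutes_saved_per_note": mean(
            r.minutes_per_note_before - r.minutes_per_note_after for r in records
        ),
        "avg_satisfaction": mean(r.satisfaction_1_to_5 for r in records),
        # Report the worst case so one struggling user is not hidden by the average.
        "min_word_accuracy": min(r.word_accuracy for r in records),
    }


# Made-up sample data for two pilot participants.
sample = [
    PilotRecord("Clinician A", 10.0, 6.0, 4, 0.97),
    PilotRecord("Clinician B", 8.0, 6.0, 5, 0.99),
]
print(summarize(sample))
```

Reporting the lowest accuracy alongside the averages is a deliberate choice: a pilot should surface the clinician the software serves worst, not just the typical case.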

This hands-on approach cuts through the marketing fluff and shows you what you're really getting. If you're just starting to look at your options, our guide on dictation software for medical professionals is a great place to learn what to expect from a top-tier solution.

Got Questions? We've Got Answers

Bringing any new tool into a clinical setting always raises a few critical questions. When it comes to voice recognition software in healthcare, decision-makers and providers alike want to get straight to the point: How well does it actually work, how difficult is it to set up, and is it secure?

Let’s tackle these common concerns head-on.

Will It Understand Different Accents and Complex Medical Terms?

This is usually the first question on everyone's mind. Can the software keep up with the fast-paced, jargon-filled reality of clinical conversations? The short answer is yes. Leading solutions are now hitting over 99% accuracy straight out of the box because they've been trained on enormous databases of real-world clinical language.

But the real magic is in how they learn. The best systems use machine learning to adapt to an individual user's voice—your specific accent, your speaking cadence, your unique phrasing. It gets smarter the more you use it. This is precisely why a general-purpose voice assistant just can't cut it for medical dictation; it hasn't been trained to distinguish between "hypokalemia" and "hyperkalemia" with the same level of precision.

Key Insight: Top-tier medical voice recognition doesn't just start accurate; it's built to become a personalized tool for each clinician, constantly improving to ensure it captures every detail correctly.

What Does the Implementation Actually Look Like?

You might be picturing a long, drawn-out IT project, but modern cloud-based systems are surprisingly quick to get going. Most teams can be up and running in just a few days. The process usually breaks down into three simple stages:

EHR Integration: The software is connected to your existing Electronic Health Record system.

User Setup: Individual voice profiles are created for each doctor, nurse, or therapist.

Onboarding: The vendor provides an initial training session to get everyone comfortable.

While a great partner will offer comprehensive training, the systems themselves are now so intuitive that most clinicians get the hang of it almost immediately. A smart approach is to start with a pilot program in one department. It's a proven way to work out any kinks and ensure a smooth rollout for everyone.

How Does It Handle HIPAA and Keep Patient Data Safe?

In healthcare, data security isn't just a feature; it's everything. Any vendor worth your time has built their platform from the ground up with compliance in mind. This means all patient data is encrypted both in transit and at rest.

Reputable providers will operate on secure, HIPAA-compliant cloud infrastructure and will always sign a Business Associate Agreement (BAA), legally binding them to protect patient information. They also include strong access controls and detailed audit trails, giving you full visibility and control over who sees what. It's not just a convenience tool; it's a secure one.
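If "access controls and audit trails" sounds abstract, the core idea is simple: every attempt to touch a record is checked against the user's role and written to a log, whether it succeeds or not. Here is a minimal sketch of that pattern. The roles, permissions, and function names are hypothetical, and nothing this small should be mistaken for a HIPAA-compliant implementation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping for illustration only.
ROLE_PERMISSIONS = {
    "physician": {"read_note", "sign_note"},
    "transcriptionist": {"read_note"},
    "billing": set(),
}


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, user: str, role: str, action: str, allowed: bool) -> None:
        """Append one entry per access attempt, including denied ones."""
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "allowed": allowed,
        })


def authorize(user: str, role: str, action: str, log: AuditLog) -> bool:
    """Check the role's permissions and log the attempt either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    log.record(user, role, action, allowed)
    return allowed


log = AuditLog()
print(authorize("dr_lee", "physician", "sign_note", log))
print(authorize("temp_user", "billing", "read_note", log))
print(len(log.entries), "attempts logged")
```

When you evaluate vendors, it is fair to ask to see what their real audit trail records and how long it is retained.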

Ready to see how AI-powered dictation can transform your workflow? VoiceType AI helps you write up to nine times faster with 99.7% accuracy, integrating seamlessly with all your applications. Try VoiceType AI for free and reclaim your time.

At its core, voice recognition software for healthcare is a technology that lets clinicians turn their spoken words into text that flows directly into an electronic health record (EHR). It’s essentially a digital scribe that captures everything from clinical notes and patient histories to diagnoses in real time, freeing providers from the endless cycle of manual typing.

Ending the Burden of Clinical Paperwork

Picture this: a doctor wraps up a full day of seeing patients, only to be greeted by a mountain of clinical notes waiting to be typed. This isn't just a hypothetical scenario—it's the daily reality for countless healthcare professionals and a huge driver of burnout. All those hours spent typing or scribbling notes steal time that could be spent with patients or simply recharging.

This administrative slog is a massive, systemic problem. In fact, physicians often spend about 26.6% of their day on documentation and tack on an extra 1.77 hours after their regular workday just to catch up. While traditional methods like typing or handwriting are still around, voice recognition technology has become a game-changer for speeding up documentation and boosting accuracy. You can learn more about how voice recognition tackles this challenge in healthcare.

A Modern Solution to an Old Problem

This is exactly where voice recognition software steps in to offer a real, practical solution. Instead of being chained to a keyboard, a clinician can just speak their notes as they naturally would, either during or right after a patient visit. The software does the heavy lifting, instantly translating their speech into accurate, structured text that plugs right into the EHR.

It’s like turning the slow, tedious monologue of typing into a dynamic conversation with the patient's chart. This simple change allows clinicians to:

Reclaim Their Time: They can dramatically cut down on those late nights spent finishing up charts.

Improve Patient Focus: It’s easier to maintain eye contact and build rapport when you’re not staring at a screen.

Enhance Note Quality: Providers can capture richer, more detailed patient stories in their own words, without having to summarize on the fly for typing.

By taking over one of the most draining parts of a provider's job, this technology does more than just make things efficient. It helps bring the focus of medicine back to where it belongs: on the patient. It creates a path toward a more sustainable and satisfying way to practice, where technology finally works for the provider, not the other way around.

How Voice Recognition Actually Understands Doctors

It’s easy to think of medical voice recognition as just a fancier version of the speech-to-text on your smartphone. But that's not the whole story. While the basic concept is the same, voice recognition software for healthcare is playing an entirely different game. Your phone’s dictation tool might recognize the word "metformin," but it has no idea it's a diabetes medication or where it belongs in a patient’s chart.

Let's use an analogy. A standard voice app is like someone who can spell words aloud in a language they don't speak—they can repeat the sounds, but there's no comprehension. Healthcare voice recognition, on the other hand, is like a seasoned medical interpreter. It understands the clinical context, the subtle nuances, and what the doctor truly intends to document.

This sophisticated understanding comes from two powerful technologies working in tandem: Natural Language Processing (NLP) and Machine Learning (ML). These are the twin engines that turn a doctor's spoken words into structured, clinically meaningful data. To dig deeper into how these systems work, it's worth exploring the role of Artificial Intelligence in healthcare.

The Power of Natural Language Processing

This is where the real "understanding" happens. Natural Language Processing gives the software the ability to grasp the context of what's being said. It doesn't just process a linear string of words; it analyzes grammar, syntax, and the relationships between medical terms to figure out the doctor's meaning. It's the critical step that separates simple transcription from intelligent interpretation.

For instance, a system with robust NLP can:

Identify Clinical Entities: It knows that "myocardial infarction" is a diagnosis, "lisinopril" is a medication, and "shortness of breath" is a symptom.

Understand Medical Nuance: It can tell the difference between "patient has a family history of hypertension" and "patient is being treated for hypertension." Those are two very different clinical statements.

Structure the Narrative: It intelligently populates the correct fields in an EHR note, putting the right information into the History of Present Illness (HPI) or Assessment and Plan sections automatically.

This ability to parse the often-complex and fragmented way clinicians naturally speak is what makes the technology so incredibly useful in the real world.

Key Takeaway: NLP allows the software to comprehend the meaning behind a doctor's dictation, not just transcribe the words. It essentially reads between the lines to create an accurate and perfectly organized clinical note.

Adapting with Machine Learning

If NLP provides the understanding, Machine Learning provides the intelligence to adapt and get better. ML algorithms allow the software to learn from every single interaction, constantly refining its performance. This is absolutely essential in a field as diverse as medicine.

This ongoing learning process allows the software to adjust to:

Individual Accents and Dialects: It quickly becomes familiar with a specific user’s unique speech patterns, pronunciation, and pacing.

Dictation Styles: The software learns whether a doctor prefers to speak in short, punchy commands or long, flowing sentences.

New Terminology: As new drugs, procedures, and acronyms enter the medical lexicon, the system updates its vocabulary to keep up.

This adaptive intelligence is the secret behind today's top systems achieving accuracy rates of over 99%. The software is a dynamic, evolving tool that gets smarter and more personalized with every use, making it an indispensable partner for any busy clinician.

5 Essential Features of Modern Voice Recognition Tools

When you start looking at voice recognition software for healthcare, you'll quickly realize they aren't all the same. Sure, they all turn speech into text, but the great ones do so much more. They're built from the ground up to handle the unique chaos and complexity of a clinical setting.

Think of it like this: a basic speech-to-text app is a simple calculator. A modern clinical voice recognition system is a full-blown financial modeling spreadsheet. One just crunches numbers; the other helps you make critical decisions, organize data, and see the bigger picture. If you want to get into the nuts and bolts of the AI driving this shift, this guide on voice to text AI is a great place to start.

Integration is the name of the game. A tool that can't talk to your other systems is just another silo, and healthcare has enough of those already.

As you can see, seamless workflow with existing tech like your EHR isn't just a "nice-to-have"—it's the whole point.

Front-End vs. Back-End Recognition

One of the first things you'll need to understand is the difference between front-end and back-end voice recognition. It really comes down to a simple question: when does the transcription happen, and who signs off on it?

A lot of practices end up using a mix of both, depending on the department and the doctor's preference. The key is to match the workflow to the clinical need.

The table below breaks down the core differences to help you see which might be a better fit for different scenarios.

Essential Features Comparison Front-End vs Back-End Voice Recognition

Feature | Front-End Recognition | Back-End Recognition |

|---|---|---|

How It Works | Clinician speaks, and words appear on the screen instantly, right in the EHR. | Clinician records audio, which is sent to a server. The text comes back later. |

Best For | Busy clinics, ERs, and situations needing immediate note completion. | Specialties with high-volume, complex reports like radiology or pathology. |

Turnaround Time | Immediate. The note is done and signed in one session. | Delayed. Can range from a few hours to a full day. |

Who Edits? | The clinician self-corrects on the spot. | A medical transcriptionist or editor often reviews and cleans up the draft first. |

Primary Benefit | Speed and control. The provider owns the entire process from start to finish. | Efficiency. The provider dictates and moves on, outsourcing the editing task. |

Ultimately, front-end gives the physician total control for faster sign-offs, while back-end lets them offload the tedious editing work so they can get to the next patient or scan.

Game-Changing Capabilities

Beyond the core transcription engine, the truly powerful systems come with features that feel less like a tool and more like a partner.

Voice commands are a perfect example. Instead of clicking through a dozen menus, a doctor can just say, "Insert normal physical exam template" or "Show me Jane Doe's last labs." It’s about removing friction and keeping the focus on the patient, not the computer screen.

And let's not forget mobile accessibility. Modern medicine doesn't happen at a desk. Clinicians need to capture notes during rounds, between patient rooms, or even from home. A solid mobile app means documentation gets done on the spot, which means fewer forgotten details and more accurate records.

Real-World Impact on Patient Care and Efficiency

It’s one thing to talk about benefits in theory, but the true value of voice recognition software for healthcare really comes alive in the day-to-day work of a clinic or hospital. This is where those efficiency gains stop being just numbers on a page and start translating into better patient outcomes and a more sustainable workload for clinicians. The technology goes from a neat idea to a tool that genuinely changes how work gets done.

Think about a radiologist interpreting a complex MRI. Instead of constantly stopping to type, they can dictate their findings in real-time as they analyze each image, capturing subtle observations while they're still fresh. This not only speeds up the reporting process but also makes sure every critical detail is logged accurately. The end result is a faster and more precise diagnosis.

Or picture a surgeon in the operating room. Post-op notes are essential, but documenting them immediately without breaking sterile protocol is a huge challenge. With voice recognition, the surgeon can dictate their notes right after the procedure is finished, ensuring accuracy and compliance without ever needing to touch a keyboard.

Transforming the Patient Encounter

The primary care setting is where you can see some of the most profound changes. A doctor can carry on a natural conversation during an exam, speaking their notes as they go. This simple act allows them to maintain eye contact and build a real connection with the patient instead of having their back turned to a computer screen.

This shift creates a powerful ripple effect throughout the entire practice:

Less Time on Paperwork: Physicians often complete their notes 30% to 50% faster compared to typing, which is a massive relief in a back-to-back schedule.

Fewer Clerical Errors: When you dictate notes in the moment, you're far less likely to make transcription mistakes or forget important details later on.

Faster Billing Cycles: As soon as notes are finished and signed, the coding and billing process can kick off immediately, eliminating costly delays.

A key clinical study found that modern, cloud-based voice recognition can hit accuracy rates over 90% even with complex medical terminology. This frees up a significant amount of time for clinicians to focus on their patients.

A Clear Return on Investment

Beyond the obvious clinical perks, the boost to morale is impossible to ignore. Taking away a major source of administrative frustration helps physicians feel less overwhelmed and more engaged with the meaningful parts of their job. It's a direct countermeasure to one of the biggest drivers of burnout. Gaining a wider perspective on how medical practices are improving with technology can put these kinds of advancements into context.

At the end of the day, the software pays for itself by turning saved time into better care. Every minute a doctor isn't typing is another minute they can spend listening to a patient, thinking through a diagnosis, or collaborating with colleagues. If you want to dig deeper into the mechanics of this, our guide on https://voicetype.com/blog/speech-to-text-medical-transcription is a great place to start.

Choosing the Right Voice Recognition Partner

Picking voice recognition software for your healthcare practice isn't just about buying a tool. Think of it as bringing on a long-term partner. When you get it right, administrative tasks melt away and clinical workflows just flow. But the wrong choice? That leads to frustrated users, wasted money, and an implementation that never gets off the ground.

The secret is to look past the flashy features and zero in on what actually matters in a busy clinical setting. You need a solution that fits into your existing systems like a puzzle piece, understands complex medical jargon without a hitch, and comes from a vendor who’s truly invested in your team’s success with solid training and support.

This is a big decision, and it’s one that more and more practices are making. The global medical speech recognition market was valued at around USD 1.73 billion in 2024 and is expected to surge to USD 5.58 billion by 2035. This isn't just a trend; it's a fundamental shift in how clinical work gets done. You can dig deeper into the numbers with this global forecast for medical speech recognition.
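For a sense of what that forecast implies year over year, the two figures above can be converted into a compound annual growth rate. This is a minimal sketch: the 11-year horizon is simply read off the quoted dates (2024 to 2035), and the function name `cagr` is an illustrative choice, not something taken from the forecast itself.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above, in USD billions; 2024 -> 2035 spans 11 years.
rate = cagr(1.73, 5.58, 2035 - 2024)
print(f"Implied growth: {rate:.1%} per year")
```

Under those assumptions, the implied rate works out to roughly 11% per year.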

Key Evaluation Criteria

To make a smart choice, you need to ask the right questions. Your evaluation should be built around a few make-or-break areas. When you're talking to vendors and watching demos, treat it like an interview and push for clear, honest answers on these points.

EHR Integration: How well does the software actually play with your EHR? Don't just take their word for it. Ask for a live demo showing how it fills out fields, moves between screens, and signs off on notes directly within your system.

Medical Vocabulary and Accuracy: Can it keep up with the specific terminology, drug names, and acronyms your specialty uses every day? Any reputable vendor should be able to show you hard data on its accuracy rates with relevant medical dictionaries.

Training and Support: What happens after you sign the contract? Look for vendors who offer a clear onboarding plan, real human support, and a go-to person you can call when things get tricky.

Setting Up a Pilot Program

Brochures and canned demos can only show you so much. The only real way to know if a solution will work for your team is to test it in the wild with a pilot program.

Getting the software into the hands of a small group of clinicians gives you a real-world stress test. They can see how it performs under pressure, providing priceless feedback before you even think about a full rollout.

A successful pilot program should have clear goals: measure time saved on documentation, track user satisfaction, and confirm the software’s accuracy with your providers' dictation styles.

This hands-on approach cuts through the marketing fluff and shows you what you're really getting. If you're just starting to look at your options, our guide on dictation software for medical professionals is a great place to learn what to expect from a top-tier solution.
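The pilot goals above are easier to track when they are reduced to numbers. One common way to score transcription accuracy is word error rate (WER): the number of word-level substitutions, insertions, and deletions needed to turn the software's output into what the clinician actually said, divided by the number of words spoken. The sketch below is only an illustration; the sample sentences, the timing figures, and the helper names `word_error_rate` and `percent_time_saved` are all hypothetical and not tied to any vendor's tooling.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # Classic dynamic-programming edit distance, computed one row at a time.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


def percent_time_saved(minutes_typing: float, minutes_dictating: float) -> float:
    """Share of documentation time saved by dictation, as a percentage."""
    return 100 * (minutes_typing - minutes_dictating) / minutes_typing


# Hypothetical pilot sample: what the clinician said vs. what the software wrote.
said = "patient presents with hypokalemia and mild fatigue"
transcribed = "patient presents with hyperkalemia and mild fatigue"

wer = word_error_rate(said, transcribed)
print(f"Word error rate: {wer:.1%}")
print(f"Time saved: {percent_time_saved(10, 6):.0f}%")
```

Note that a single wrong word counts as just one error here, even when it flips the clinical meaning. During a pilot it is worth reviewing which words were missed, not only how many.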

Got Questions? We've Got Answers

Bringing any new tool into a clinical setting always raises a few critical questions. When it comes to voice recognition software in healthcare, decision-makers and providers alike want to get straight to the point: How well does it actually work, how difficult is it to set up, and is it secure?

Let’s tackle these common concerns head-on.

Will It Understand Different Accents and Complex Medical Terms?

This is usually the first question on everyone's mind. Can the software keep up with the fast-paced, jargon-filled reality of clinical conversations? The short answer is yes. Leading solutions are now hitting over 99% accuracy straight out of the box because they've been trained on enormous databases of real-world clinical language.

But the real magic is in how they learn. The best systems use machine learning to adapt to an individual user's voice: your specific accent, your speaking cadence, your unique phrasing. They get smarter the more you use them. This is precisely why a general-purpose voice assistant just can't cut it for medical dictation; it hasn't been trained to distinguish between "hypokalemia" and "hyperkalemia" with the same level of precision.

Key Insight: Top-tier medical voice recognition doesn't just start accurate; it's built to become a personalized tool for each clinician, constantly improving to ensure it captures every detail correctly.

What Does the Implementation Actually Look Like?

You might be picturing a long, drawn-out IT project, but modern cloud-based systems are surprisingly quick to get going. Most teams can be up and running in just a few days. The process usually breaks down into three simple stages:

EHR Integration: The software is connected to your existing Electronic Health Record system.

User Setup: Individual voice profiles are created for each doctor, nurse, or therapist.

Onboarding: The vendor provides an initial training session to get everyone comfortable.

While a great partner will offer comprehensive training, the systems themselves are now so intuitive that most clinicians get the hang of it almost immediately. A smart approach is to start with a pilot program in one department. It's a proven way to work out any kinks and ensure a smooth rollout for everyone.

How Does It Handle HIPAA and Keep Patient Data Safe?

In healthcare, data security isn't just a feature; it's everything. Any vendor worth your time has built their platform from the ground up with compliance in mind. This means all patient data is encrypted both in transit and at rest.

Reputable providers will operate on secure, HIPAA-compliant cloud infrastructure and will always sign a Business Associate Agreement (BAA), legally binding them to protect patient information. They also include strong access controls and detailed audit trails, giving you full visibility and control over who sees what. It's not just a convenience tool; it's a secure one.

Ready to see how AI-powered dictation can transform your workflow? VoiceType AI helps you write up to nine times faster with 99.7% accuracy, integrating seamlessly with all your applications. Try VoiceType AI for free and reclaim your time.

At its core, voice recognition software for healthcare is a technology that lets clinicians turn their spoken words into text that flows directly into an electronic health record (EHR). It’s essentially a digital scribe that captures everything from clinical notes and patient histories to diagnoses in real time, freeing providers from the endless cycle of manual typing.

Ending the Burden of Clinical Paperwork

Picture this: a doctor wraps up a full day of seeing patients, only to be greeted by a mountain of clinical notes waiting to be typed. This isn't just a hypothetical scenario—it's the daily reality for countless healthcare professionals and a huge driver of burnout. All those hours spent typing or scribbling notes steal time that could be spent with patients or simply recharging.

This administrative slog is a massive, systemic problem. In fact, physicians often spend about 26.6% of their day on documentation and tack on an extra 1.77 hours after their regular workday just to catch up. While traditional methods like typing or handwriting are still around, voice recognition technology has become a game-changer for speeding up documentation and boosting accuracy. You can learn more about how voice recognition tackles this challenge in healthcare.

A Modern Solution to an Old Problem

This is exactly where voice recognition software steps in to offer a real, practical solution. Instead of being chained to a keyboard, a clinician can just speak their notes as they naturally would, either during or right after a patient visit. The software does the heavy lifting, instantly translating their speech into accurate, structured text that plugs right into the EHR.

It’s like turning the slow, tedious monologue of typing into a dynamic conversation with the patient's chart. This simple change allows clinicians to:

Reclaim Their Time: They can dramatically cut down on those late nights spent finishing up charts.

Improve Patient Focus: It’s easier to maintain eye contact and build rapport when you’re not staring at a screen.

Enhance Note Quality: Providers can capture richer, more detailed patient stories in their own words, without having to summarize on the fly for typing.

By taking over one of the most draining parts of a provider's job, this technology does more than just make things efficient. It helps bring the focus of medicine back to where it belongs: on the patient. It creates a path toward a more sustainable and satisfying way to practice, where technology finally works for the provider, not the other way around.

How Voice Recognition Actually Understands Doctors

It’s easy to think of medical voice recognition as just a fancier version of the speech-to-text on your smartphone. But that's not the whole story. While the basic concept is the same, voice recognition software for healthcare is playing an entirely different game. Your phone’s dictation tool might recognize the word "metformin," but it has no idea it's a diabetes medication or where it belongs in a patient’s chart.

Let's use an analogy. A standard voice app is like someone who can spell words aloud in a language they don't speak—they can repeat the sounds, but there's no comprehension. Healthcare voice recognition, on the other hand, is like a seasoned medical interpreter. It understands the clinical context, the subtle nuances, and what the doctor truly intends to document.

This sophisticated understanding comes from two powerful technologies working in tandem: Natural Language Processing (NLP) and Machine Learning (ML). These are the twin engines that turn a doctor's spoken words into structured, clinically meaningful data. To dig deeper into how these systems work, it's worth exploring the role of Artificial Intelligence in healthcare.

The Power of Natural Language Processing

This is where the real "understanding" happens. Natural Language Processing gives the software the ability to grasp the context of what's being said. It doesn't just process a linear string of words; it analyzes grammar, syntax, and the relationships between medical terms to figure out the doctor's meaning. It's the critical step that separates simple transcription from intelligent interpretation.

For instance, a system with robust NLP can:

Identify Clinical Entities: It knows that "myocardial infarction" is a diagnosis, "lisinopril" is a medication, and "shortness of breath" is a symptom.

Understand Medical Nuance: It can tell the difference between "patient has a family history of hypertension" and "patient is being treated for hypertension." Those are two very different clinical statements.

Structure the Narrative: It intelligently populates the correct fields in an EHR note, putting the right information into the History of Present Illness (HPI) or Assessment and Plan sections automatically.

This ability to parse the often-complex and fragmented way clinicians naturally speak is what makes the technology so incredibly useful in the real world.

Key Takeaway: NLP allows the software to comprehend the meaning behind a doctor's dictation, not just transcribe the words. It essentially reads between the lines to create an accurate and perfectly organized clinical note.

Adapting with Machine Learning

If NLP provides the understanding, Machine Learning provides the intelligence to adapt and get better. ML algorithms allow the software to learn from every single interaction, constantly refining its performance. This is absolutely essential in a field as diverse as medicine.

This ongoing learning process allows the software to adjust to:

Individual Accents and Dialects: It quickly becomes familiar with a specific user’s unique speech patterns, pronunciation, and pacing.

Dictation Styles: The software learns whether a doctor prefers to speak in short, punchy commands or long, flowing sentences.

New Terminology: As new drugs, procedures, and acronyms enter the medical lexicon, the system updates its vocabulary to keep up.

This adaptive intelligence is the secret behind today's top systems achieving accuracy rates of over 99%. The software is a dynamic, evolving tool that gets smarter and more personalized with every use, making it an indispensable partner for any busy clinician.

5 Essential Features of Modern Voice Recognition Tools

When you start looking at voice recognition software for healthcare, you'll quickly realize they aren't all the same. Sure, they all turn speech into text, but the great ones do so much more. They're built from the ground up to handle the unique chaos and complexity of a clinical setting.

Think of it like this: a basic speech-to-text app is a simple calculator. A modern clinical voice recognition system is a full-blown financial modeling spreadsheet. One just crunches numbers; the other helps you make critical decisions, organize data, and see the bigger picture. If you want to get into the nuts and bolts of the AI driving this shift, this guide on voice to text AI is a great place to start.

Integration is the name of the game. A tool that can't talk to your other systems is just another silo, and healthcare has enough of those already.

Seamless workflow with existing tech like your EHR isn't just a "nice-to-have"; it's the whole point.

Front-End vs. Back-End Recognition

One of the first things you'll need to understand is the difference between front-end and back-end voice recognition. It really comes down to a simple question: when does the transcription happen, and who signs off on it?

A lot of practices end up using a mix of both, depending on the department and the doctor's preference. The key is to match the workflow to the clinical need.

The table below breaks down the core differences to help you see which might be a better fit for different scenarios.

Essential Features Comparison: Front-End vs. Back-End Voice Recognition

| Feature | Front-End Recognition | Back-End Recognition |
|---|---|---|
| How It Works | Clinician speaks, and words appear on the screen instantly, right in the EHR. | Clinician records audio, which is sent to a server. The text comes back later. |
| Best For | Busy clinics, ERs, and situations needing immediate note completion. | Specialties with high-volume, complex reports like radiology or pathology. |
| Turnaround Time | Immediate. The note is done and signed in one session. | Delayed. Can range from a few hours to a full day. |
| Who Edits? | The clinician self-corrects on the spot. | A medical transcriptionist or editor often reviews and cleans up the draft first. |
| Primary Benefit | Speed and control. The provider owns the entire process from start to finish. | Efficiency. The provider dictates and moves on, outsourcing the editing task. |

Ultimately, front-end gives the physician total control for faster sign-offs, while back-end lets them offload the tedious editing work so they can get to the next patient or scan.

Game-Changing Capabilities

Beyond the core transcription engine, the truly powerful systems come with features that feel less like a tool and more like a partner.

Voice commands are a perfect example. Instead of clicking through a dozen menus, a doctor can just say, "Insert normal physical exam template" or "Show me Jane Doe's last labs." It’s about removing friction and keeping the focus on the patient, not the computer screen.
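To make the idea concrete, here is a minimal sketch of how a system might tell a spoken command apart from ordinary dictation. The patterns, the action names, and the `route_utterance` function are all hypothetical and chosen for illustration; a real product would define its own command grammar and would likely be far more sophisticated than simple pattern matching.

```python
import re

# Hypothetical command patterns, modeled on the two example phrases above.
# A real system defines its own grammar; these are assumptions.
COMMAND_PATTERNS = [
    (re.compile(r"^insert (?P<name>.+) template$", re.IGNORECASE), "insert_template"),
    (re.compile(r"^show me (?P<patient>.+)'s last labs$", re.IGNORECASE), "show_labs"),
]


def route_utterance(text):
    """Return (action, arguments) for one recognized utterance."""
    # Ignore surrounding whitespace and a trailing period before matching.
    cleaned = text.strip().rstrip(".")
    for pattern, action in COMMAND_PATTERNS:
        match = pattern.match(cleaned)
        if match:
            return action, match.groupdict()
    # Anything that is not a known command is treated as note dictation.
    return "dictate", {"text": text.strip()}


for phrase in [
    "Insert normal physical exam template",
    "Show me Jane Doe's last labs",
    "Patient reports mild headache for three days.",
]:
    print(route_utterance(phrase))
```

The design choice worth noticing is the fallback: anything that doesn't match a known command is kept as dictation, so a misheard command never silently discards what the clinician said.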

And let's not forget mobile accessibility. Modern medicine doesn't happen at a desk. Clinicians need to capture notes during rounds, between patient rooms, or even from home. A solid mobile app means documentation gets done on the spot, which means fewer forgotten details and more accurate records.

Real-World Impact on Patient Care and Efficiency

It’s one thing to talk about benefits in theory, but the true value of voice recognition software for healthcare really comes alive in the day-to-day work of a clinic or hospital. This is where those efficiency gains stop being just numbers on a page and start translating into better patient outcomes and a more sustainable workload for clinicians. The technology goes from a neat idea to a tool that genuinely changes how work gets done.

Think about a radiologist interpreting a complex MRI. Instead of constantly stopping to type, they can dictate their findings in real-time as they analyze each image, capturing subtle observations while they're still fresh. This not only speeds up the reporting process but also makes sure every critical detail is logged accurately. The end result is a faster and more precise diagnosis.

Or picture a surgeon in the operating room. Post-op notes are essential, but documenting them immediately without breaking sterile protocol is a huge challenge. With voice recognition, the surgeon can dictate their notes right after the procedure is finished, ensuring accuracy and compliance without ever needing to touch a keyboard.

Transforming the Patient Encounter

The primary care setting is where you can see some of the most profound changes. A doctor can carry on a natural conversation during an exam, speaking their notes as they go. This simple act allows them to maintain eye contact and build a real connection with the patient instead of having their back turned to a computer screen.

This shift creates a powerful ripple effect throughout the entire practice:

Less Time on Paperwork: Physicians often complete their notes 30% to 50% faster compared to typing, which is a massive relief in a back-to-back schedule.

Fewer Clerical Errors: When you dictate notes in the moment, you're far less likely to make transcription mistakes or forget important details later on.

Faster Billing Cycles: As soon as notes are finished and signed, the coding and billing process can kick off immediately, eliminating costly delays.

A key clinical study found that modern, cloud-based voice recognition can hit accuracy rates over 90% even with complex medical terminology. This frees up a significant amount of time for clinicians to focus on their patients.

A Clear Return on Investment

Beyond the obvious clinical perks, the boost to morale is impossible to ignore. Taking away a major source of administrative frustration helps physicians feel less overwhelmed and more engaged with the meaningful parts of their job. It's a direct countermeasure to one of the biggest drivers of burnout. Gaining a wider perspective on how medical practices are improving with technology can put these kinds of advancements into context.

At the end of the day, the software pays for itself by turning saved time into better care. Every minute a doctor isn't typing is another minute they can spend listening to a patient, thinking through a diagnosis, or collaborating with colleagues. If you want to dig deeper into the mechanics of this, our guide on speech-to-text medical transcription (https://voicetype.com/blog/speech-to-text-medical-transcription) is a great place to start.

Choosing the Right Voice Recognition Partner

Picking voice recognition software for your healthcare practice isn't just about buying a tool. Think of it as bringing on a long-term partner. When you get it right, administrative tasks melt away and clinical workflows just flow. But the wrong choice? That leads to frustrated users, wasted money, and an implementation that never gets off the ground.

The secret is to look past the flashy features and zero in on what actually matters in a busy clinical setting. You need a solution that fits into your existing systems like a puzzle piece, understands complex medical jargon without a hitch, and comes from a vendor who’s truly invested in your team’s success with solid training and support.

This is a big decision, and it’s one that more and more practices are making. The global medical speech recognition market was valued at around USD 1.73 billion in 2024 and is expected to surge to USD 5.58 billion by 2035. This isn't just a trend; it's a fundamental shift in how clinical work gets done. You can dig deeper into the numbers with this global forecast for medical speech recognition.
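For a sense of scale, those two figures can be turned into an implied yearly growth rate. The short sketch below applies the standard compound annual growth rate formula to the numbers quoted above; it assumes smooth compounding over the 11 years from 2024 to 2035, which is a simplification and not something the forecast itself states.

```python
def compound_annual_growth_rate(start_value, end_value, years):
    """Standard CAGR: the constant yearly rate that turns start into end."""
    return (end_value / start_value) ** (1 / years) - 1


# Market values in USD billions, as quoted in the paragraph above.
cagr = compound_annual_growth_rate(1.73, 5.58, 2035 - 2024)
print(f"Implied annual growth: {cagr:.1%}")
```

Under that assumption, the market would be growing a little over 11% per year.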

Key Evaluation Criteria

To make a smart choice, you need to ask the right questions. Your evaluation should be built around a few make-or-break areas. When you're talking to vendors and watching demos, treat it like an interview and push for clear, honest answers on these points.

EHR Integration: How well does the software actually play with your EHR? Don't just take their word for it. Ask for a live demo showing how it fills out fields, moves between screens, and signs off on notes directly within your system.

Medical Vocabulary and Accuracy: Can it keep up with the specific terminology, drug names, and acronyms your specialty uses every day? Any reputable vendor should be able to show you hard data on its accuracy rates with relevant medical dictionaries.

Training and Support: What happens after you sign the contract? Look for vendors who offer a clear onboarding plan, real human support, and a go-to person you can call when things get tricky.

Setting Up a Pilot Program

Brochures and canned demos can only show you so much. The only real way to know if a solution will work for your team is to test it in the wild with a pilot program.

Getting the software into the hands of a small group of clinicians gives you a real-world stress test. They can see how it performs under pressure, providing priceless feedback before you even think about a full rollout.

A successful pilot program should have clear goals: measure time saved on documentation, track user satisfaction, and confirm the software’s accuracy with your providers' dictation styles.
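One common way to put a number on accuracy during a pilot is word error rate (WER): compare what the software produced against a transcript a human has verified, and count the word-level substitutions, insertions, and deletions. The sketch below is a minimal, self-contained version. The function name and the sample sentences are made up for illustration, and a real evaluation would also need rules for punctuation, numbers, and abbreviations.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # Levenshtein distance over words, keeping only the previous row.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion: reference word missing
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


# Illustrative example: a single clinically significant substitution.
verified = "patient has hypokalemia and mild fatigue"
software = "patient has hyperkalemia and mild fatigue"
wer = word_error_rate(verified, software)
print(f"WER: {wer:.1%}  (accuracy: {1 - wer:.1%})")
```

Keep in mind that WER treats every word equally. One wrong word out of six looks like a modest error rate here, yet swapping two similar-sounding terms could change the clinical meaning entirely, so it's worth reviewing which words were missed, not just how many.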

This hands-on approach cuts through the marketing fluff and shows you what you're really getting. If you're just starting to look at your options, our guide on dictation software for medical professionals is a great place to learn what to expect from a top-tier solution.

Got Questions? We've Got Answers

Bringing any new tool into a clinical setting always raises a few critical questions. When it comes to voice recognition software in healthcare, decision-makers and providers alike want to get straight to the point: How well does it actually work, how difficult is it to set up, and is it secure?

Let’s tackle these common concerns head-on.

Will It Understand Different Accents and Complex Medical Terms?

This is usually the first question on everyone's mind. Can the software keep up with the fast-paced, jargon-filled reality of clinical conversations? The short answer is yes. Leading solutions are now hitting over 99% accuracy straight out of the box because they've been trained on enormous databases of real-world clinical language.

But the real magic is in how they learn. The best systems use machine learning to adapt to an individual user's voice—your specific accent, your speaking cadence, your unique phrasing. It gets smarter the more you use it. This is precisely why a general-purpose voice assistant just can't cut it for medical dictation; it hasn't been trained to distinguish between "hypokalemia" and "hyperkalemia" with the same level of precision.

Key Insight: Top-tier medical voice recognition doesn't just start accurate; it's built to become a personalized tool for each clinician, constantly improving to ensure it captures every detail correctly.

What Does the Implementation Actually Look Like?

You might be picturing a long, drawn-out IT project, but modern cloud-based systems are surprisingly quick to get going. Most teams can be up and running in just a few days. The process usually breaks down into three simple stages:

EHR Integration: The software is connected to your existing Electronic Health Record system.

User Setup: Individual voice profiles are created for each doctor, nurse, or therapist.

Onboarding: The vendor provides an initial training session to get everyone comfortable.

While a great partner will offer comprehensive training, the systems themselves are now so intuitive that most clinicians get the hang of it almost immediately. A smart approach is to start with a pilot program in one department. It's a proven way to work out any kinks and ensure a smooth rollout for everyone.

How Does It Handle HIPAA and Keep Patient Data Safe?

In healthcare, data security isn't just a feature; it's everything. Any vendor worth your time has built their platform from the ground up with compliance in mind. This means all patient data is encrypted both in transit and at rest.

Reputable providers will operate on secure, HIPAA-compliant cloud infrastructure and will always sign a Business Associate Agreement (BAA), legally binding them to protect patient information. They also include strong access controls and detailed audit trails, giving you full visibility and control over who sees what. It's not just a convenience tool; it's a secure one.

Ready to see how AI-powered dictation can transform your workflow? VoiceType AI helps you write up to nine times faster with 99.7% accuracy, integrating seamlessly with all your applications. Try VoiceType AI for free and reclaim your time.